Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- Photoshop was a required skill for making sites at the time. The main banner and featured videos section were probably made in MS Paint, though.

- When a new post went up, I simply edited the HTML by hand. I didn't realize many sites had a CMS behind them! I would have been blown away by WordPress.

- A missed opportunity: Those posts could have had an RSS feed!

- Table layouts! Without flexbox or grid, this was how most developers placed their content.

- No CSS file, really. Any styling is done inline or with HTML tags, like the deprecated <font> and <center> tags.

- No JavaScript, either! I would have earned a perfect performance score in my Core Web Vitals.

- Run a DB locally

- Set up our DB schema through the Models of our application, not in SQL

- Set up migrations for our application to keep the SQL schema in sync with our models

- [Key] is a Data Annotation that lets Entity Framework know that this is our Primary Key in SQL.

- [Required] does as you'd expect. One thing worth noting: to avoid a non-nullable warning, set the Name value in the constructor.

- Tolkien's Fellowship of the Ring: Wow, the "One does not simply..." meme doesn't actually come up in the book! Nor does "You have my bow...and my axe!"

- Calvin and Hobbes Vol. 1: These strips are so delightfully dynamic! I can't believe I've slept on these.

- Mickey Mouse, Vol. 2: Trapped on Treasure Island. A find at Recycled Books in Denton. The adventure strips from the '30s are so wildly detailed!

- I've written a few words about books under the Books tag on my blog. My favorite this month: Rob Inglis Singing Lord of the Rings

- Rooms With Walls and Windows by Julie Byrne. Beautiful, moody, home grown solo album.

- This Jungle in Gaming Mix on YouTube is a special kind of nostalgic.

- program music II by KASHIWA Daisuke. Is "Music That Could Be in an Anime Movie" a genre?

- Ted Lassoooooo! 💔

- I'm listening to Cartoonist Kayfabe while I draw. Since comics are a solitary experience, it's so fun to hear a couple of pros geek out over them together!

- Use ASP.NET Core 6

- The West US region wasn't available on Azure, so I went with East US

- To get CI going, I had to select Windows as the server's operating system on Azure

- For Linux, the GitHub Action that Azure uses will generate a web.config file. In my case, on Windows, I had to add it myself to avoid IIS errors.

- My project template uses the DateOnly type, which needed a custom JSON converter on .NET 6. Here's what I added to get things going:

- Go to school, learn how to hold a pencil properly

- Before I nailed that, I had to learn to write accurately - hitting the tops of my letters on the dotted lines

- Strain a bit to get it right, trading good form for accuracy

- The bad habit solidified once accuracy became more important than technique

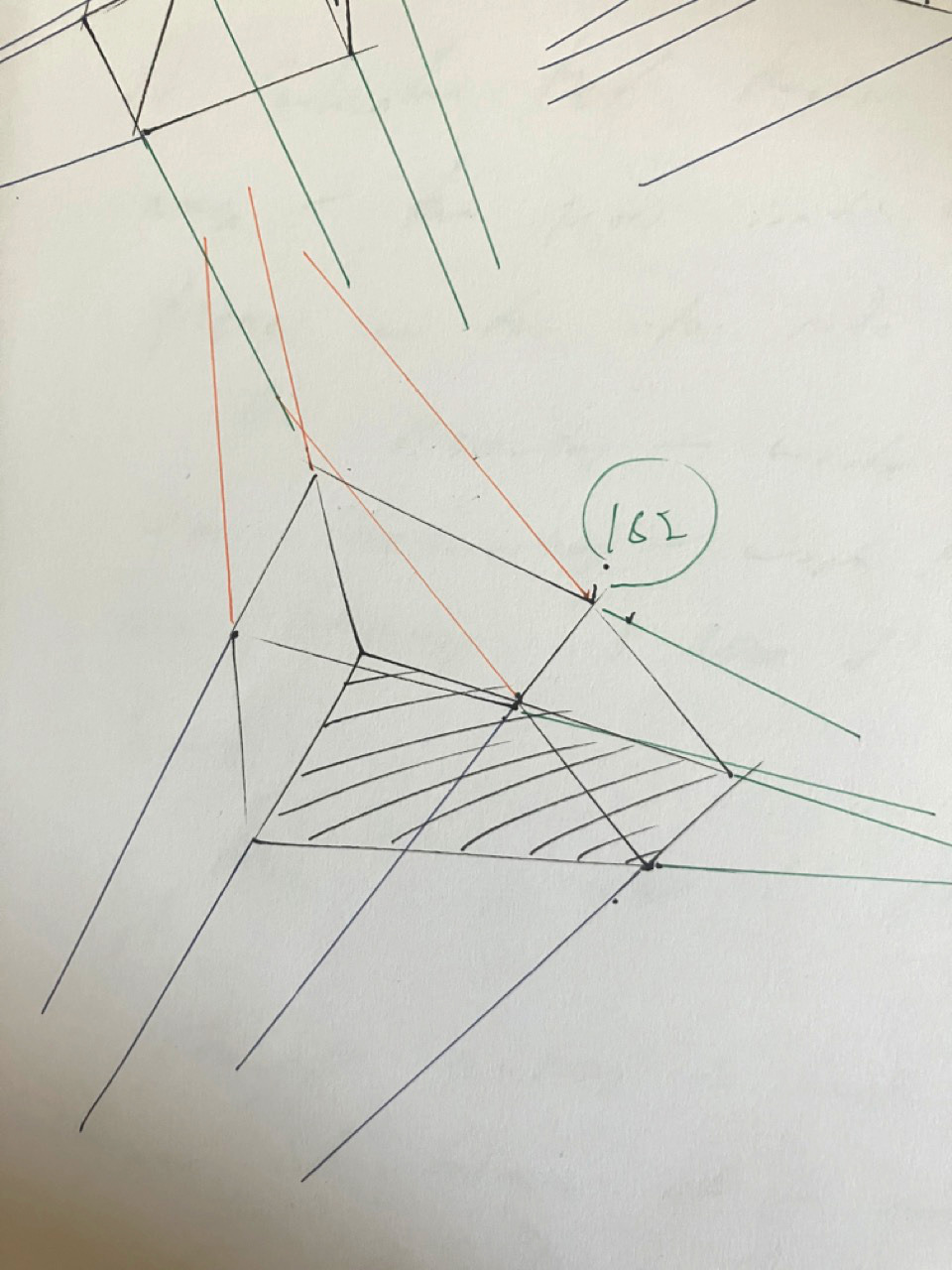

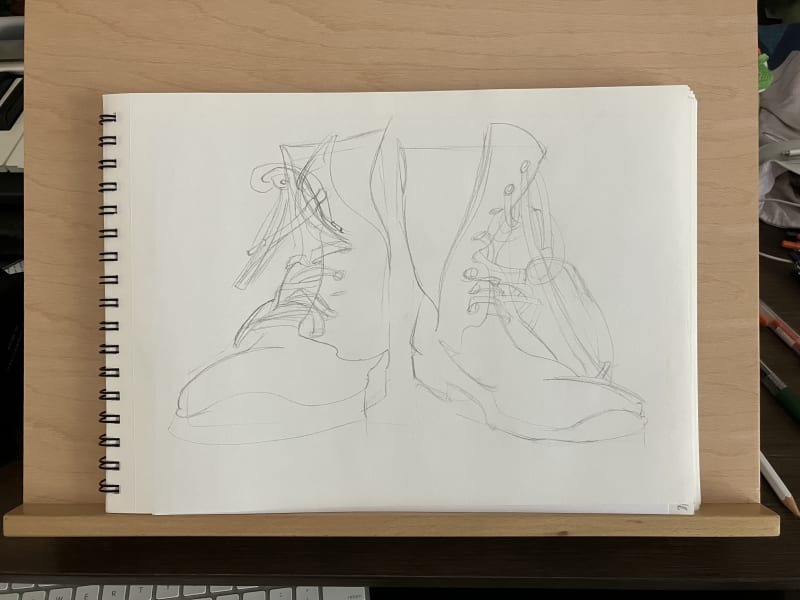

250 Box Challenge and Repetition

I'm doing the 250 box challenge right now. It's the namesake for the website drawabox.com.

The gist: You draw lots of boxes in 3 point perspective. In pen. And you extend your lines at the end to check that the points converge towards a vanishing point.

Brutal.

The exercise looks like this:

The point is to get familiar with perspective. And every now and then I stop and ask, "Am I doing this wrong? Are there any tips? Am I just looking at it the wrong way? Maybe if I find another guide..." It's a question I've had about most art: if I find the right strategy, I'll just know how to do the thing.

But, in my experience so far, there is just some intuition that comes from repetition. There's no real strategy aside from "do it again, but try another approach."

On a practical level, what helps me is to plot a dot for where I think a line will go and then ghost it like crazy. I'll intentionally plot one that I know is parallel as a reference if I'm really unsure.

But, for the most part, it's like learning a sport or an instrument: the more you do it, the more your brain will learn the fine-motor control of what you're trying to do.

That's what really struck me! Just like I have to practice scales, I have to warm up on drawing lines.

I kind of wish the challenge was called "Draw 250 boxes (mostly badly!)" since that's the intention behind it!

All this to say: Art, especially at the beginning, is just as much a physical skill as it is a design skill.

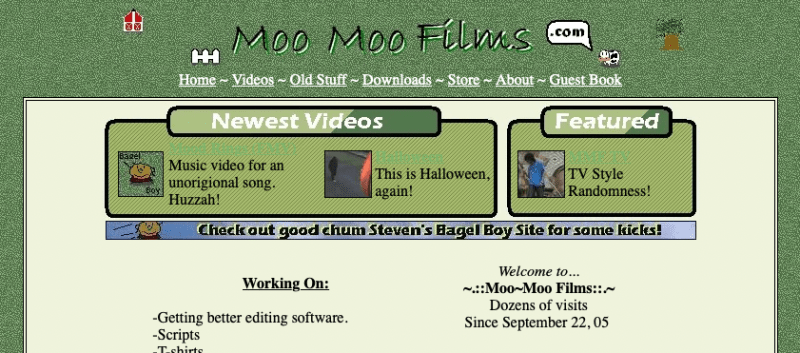

My First Website from 2005

Like many folks born in the early 90s, I grew up with the web. You could say we both grew up alongside each other! I was right on that edge of entering elementary school just as the internet became a household utility.

I was coding my first few webpages at 8 years old, which is wild to think about looking back. I played lots of Neopets at the peak of its heyday. The platform gave players a way to add custom HTML to their shop pages and profiles. With the help of some kid-friendly HTML resources, I was able to add MIDIs, custom cursors, and all sorts of Geocities-era site features!

Fast forward into my teens, on the other side of YouTube's early rise in popularity. I took up video making and sketch writing for fun. And most of the "pros" (at least, the larger channels) had their own websites.

I was already drawing comics at the time, too. I would pass four-panel strips around and share them with friends in class. Like a daily syndication, but on notebook paper and in No. 2 pencil. I wasn't aware of the rise of webcomics online at the time, but it occurred to me all the same that having a home for those doodles and videos would be a pretty neat idea.

And so, moomoofilms.com was born.

Unfortunately, most of the site has been lost to time. The HTML was coded directly on my hosting service's platform, which shut down years ago. I still have the videos on a hard drive (too juvenile to share publicly, but endearing all the same!) The comics are gone, maybe in a folder back in my parents' attic. And a few small technical experiments and widgets have been lost too. (Moral of the story: Back up your files!)

Thanks to the Wayback Machine, I was able to recover the landing page!

Technical Comparisons

A few fun observations comparing this to modern websites:

<table border="0" cellpadding="2" cellspacing="1" style="border-collapse:" width="505" bordercolor="#666666" valign="top">
  <tr>
    <td width="503" colspan="2" background="./assets/news.jpeg">
      <b><font size="2">Happy Belated New Year! - 1/20/08</font></b>
    </td>
  </tr>
  <tr>
    <td width="51">
      <img border="0" src="./assets/aimbagelboyfield.jpeg" width="50" height="50"></font>
    </td>
    <td width="451">
      <p align="left">
        Good News, everyone!
        ...
      </p>
    </td>
  </tr>
</table>

The Good Ol' Days

My site predated widespread social media. If you wanted an online profile, you had a few options like LiveJournal, Blogger, WordPress, etc. Or you did what I did and rolled up your sleeves to put the HTML together.

That made for so much customization and ownership across the internet! Compare that to the cookie-cutter profiles across social media now.

Making sites was a unique, widespread way for a broad audience to be introduced to programming. (Calling HTML programming is a stretch to some, but I say it counts!) I know plenty of developers that got their start customizing MySpace, Tumblr, and WordPress pages.

Today, it's great that anyone can make a profile on any platform and start sharing. I'm nostalgic, though, for the ownership and creativity that were baked into the early days of the web.

Especially for kids! Every now and then, I think about how my future kids will develop their own technical literacy. It's impossible to say now; things continue to change so quickly. But so long as there are platforms for them to get their hands dirty, play, and really mess around with what's under the hood, I'm sure there will be a way.

Calvin in the Tree House

Dvorak — New World Finale

So TRIUMPHANT! 🌅 💪

Database Setup and Migrations for Microsoft SQL Server and ASP.NET Core MVC

I know this is old news at this point, but using Microsoft products on Mac (let alone an M1 machine!) is a wild concept to me! It's in the same vein as Superman & Batman in the same movie, or Mario and Sonic in the same game.

I'm getting familiar with ASP.NET Core 6.0 MVC. I've been able to get things up and running with primarily native solutions, much to my surprise! There are a few different paths I've had to take to get all the way, though.

The tl;dr: For .NET, favor the dotnet CLI over the Visual Studio GUI. For SQL, Docker is your friend.

To expand on it, here's how I handled getting my local environment set up to run a local Microsoft SQL Server for my web app:

Overview

The ultimate goal here is:

Here we go!

Running Microsoft SQL Server Locally

On Windows, the tool for interacting with the database is Microsoft's SQL Server Management Studio (SSMS). On Mac and Linux, we'll have to opt for Azure Data Studio.

That takes care of the GUI.

For running a server, this guide gets you most of the way there.

The caveat is that on M1, there's not great support for the image mcr.microsoft.com/mssql/server:2022-latest.

Instead, grab the Azure image:

$ docker run -e "ACCEPT_EULA=Y" -e "MSSQL_SA_PASSWORD=YOURGENERATEDPASSWORD" -p 1433:1433 -d mcr.microsoft.com/azure-sql-edge:latest

The -e "ACCEPT_EULA=Y" flag is important: it accepts the license terms, which the container requires before it will run.

The rest of the guide will take you through connecting to Azure Data Studio!

Creating Models

Assuming you already have an app template running, we'll add a new Model called "Book" to our project:

// Book.cs
using System;
using System.ComponentModel.DataAnnotations;

namespace LibraryMVC.Models
{
    public class Book
    {
        [Key]
        public int Id { get; set; }

        [Required]
        public string Name { get; set; }

        // ISBN-13 has 13 digits, too many for an int, so store it as text
        public string? Isbn { get; set; }

        public DateTime CreatedDateTime { get; set; } = DateTime.Now;

        // Constructors share the class name; setting Name here avoids the non-nullable warning
        public Book(string name)
        {
            Name = name;
        }
    }
}

A quick rundown of some interesting points:

That's all there is to setting up the Schema!
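To see what the two annotations actually mark, here's a small sketch that runs without Entity Framework or a database. It assumes a trimmed-down copy of Book and a hypothetical BookValidation helper. It uses the Validator class from System.ComponentModel.DataAnnotations to apply the same [Required] attribute check that MVC model validation relies on; it is not what EF does when it writes to SQL, where [Required] becomes a NOT NULL column.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Linq;

// Trimmed-down copy of the Book model (no EF Core needed for this check)
public class Book
{
    [Key]
    public int Id { get; set; }

    [Required]
    public string Name { get; set; }

    public Book(string name)
    {
        Name = name;
    }
}

// Hypothetical helper, only for illustration
public static class BookValidation
{
    // Runs every validation attribute on the object and returns the error messages
    public static List<string> Validate(Book book)
    {
        var results = new List<ValidationResult>();
        var context = new ValidationContext(book);
        Validator.TryValidateObject(book, context, results, validateAllProperties: true);
        return results.Select(r => r.ErrorMessage ?? "").ToList();
    }

    // True when the property carries [Key], the marker EF Core reads for the primary key
    public static bool IsKey(string propertyName)
    {
        var property = typeof(Book).GetProperty(propertyName);
        return property != null && Attribute.IsDefined(property, typeof(KeyAttribute));
    }
}
```

An empty Name fails the [Required] check (empty strings are rejected by default), while any real title passes.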

Migrations

If you're used to MongoDB, this is where you would call it a day for setting up the schema. MongoDB doesn't require any enforced schema at the database level.

With SQL, however, we do have more setup to do there.

The excellent thing about this approach, coming from Express and Mongo, is that the source of truth largely stays in our application. We'll simply set up a migration for SQL to mirror the schema from our Model.

A deeper Migrations Overview from MS is available on their site. For us, let's get into the quick setup:

First, ensure you have the dotnet CLI installed.

We'll use it to install the Entity Framework tool globally:

dotnet tool install --global dotnet-ef --version 6.0.16

With that, you can then run this command to migrate:

dotnet ef migrations add AddBookToDatabase

AddBookToDatabase is what the migration will be named. You can call it whatever you like.

You may need to install extra NuGet packages before the command goes through. If so, you'll see an error like this:

Your startup project 'LibraryMVC' doesn't reference Microsoft.EntityFrameworkCore.Design. This package is required for the Entity Framework Core Tools to work. Ensure your startup project is correct, install the package, and try again.

Adding the migration generates code that describes how to bring the database in line with your models. To apply it, run dotnet ef database update with the SQL server connected. Entity Framework then creates the database with the appropriate tables and columns based on your models.

To verify it all worked, check Azure Data Studio for the new tables, and look for a "Migrations" folder at the root of your application.

And that's it! All set to fill this library up with books! 📚

The Haps - June 2023

Summer time!!!

Blogging & Dev

Taking a slower pace with blogging, but still sharing my adventures in C# and .NET!

You can catch up with my tech projects through the Tech tag on my blog.

Music

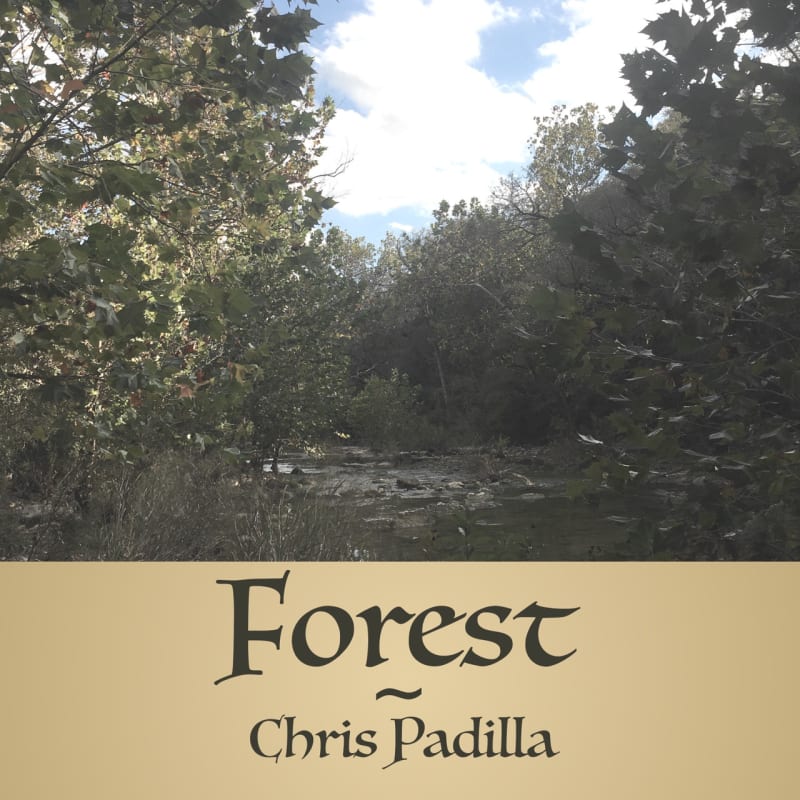

I released Forest a while back! An experiment in playing with a few sounds from Ocarina of Time and Chrono Trigger!

I'm digging deep into learning fingerstyle guitar. My favorite so far was this cowboy waltz I improvised on my new acoustic.

You can see what I've shared so far through the Music tag on my blog. I'm also sharing recordings on Instagram.

Drawing

I finished my fourth sketchbook! I'm starting to treat them as less precious and am really drawing loosely in them. It's great, very liberating!

My routine at the moment is studying other artists, drawing from imagination, and doing perspective and figure drawing exercises from Proko and drawabox.

You can see what I've made so far through the Art tag on my blog. I'm also sharing drawings on Instagram.

Words and Sounds

📚

🎧

📺

Life

It's hot. I'm cold blooded. So I'm in my element! ☀️

My folks visited for Mother's Day!! We had a good time exploring a few museums in Dallas and eating good food.

👋

Turkey Groom

Faber — Whispers of the Wind

Sooooo pretty. Lots of fun cross-hands action going on!

This was one of those pieces from when I started piano lessons that made me think, "I didn't know even the EASY pieces could sound so beautiful!" 🍃

Deploy New Projects Before Development (.NET Core & Azure)

That's a great bit of advice I got from a friend early on as a developer. This is especially true if your app goes beyond the hello world boilerplate.

For example, a couple of years ago I needed this advice because I was looking to create a MERN stack app with Express and React hosted on different services.

This week, I was in the same situation: I wanted to deploy an ASP.NET Core application that serves a React client to Azure.

The road there involved starting projects with different versions of .NET Core, adding a missing config file, and finding the correct region and OS settings to get my app up on Azure.

By the way, the sweet spot for my solution was:

// web.config
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <location path="." inheritInChildApplications="false">
    <system.webServer>
      <handlers>
        <add name="aspNetCore" path="*" verb="*" modules="AspNetCoreModuleV2" resourceType="Unspecified" />
      </handlers>
      <aspNetCore processPath="dotnet"
                  arguments=".\MyApp.dll"
                  stdoutLogEnabled="false"
                  stdoutLogFile=".\logs\stdout"
                  hostingModel="inprocess" />
    </system.webServer>
  </location>
</configuration>

// WeatherForecast.cs
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

public sealed class DateOnlyJsonConverter : JsonConverter<DateOnly>
{
    // Parse the incoming JSON date string and drop the time portion
    public override DateOnly Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
    {
        return DateOnly.FromDateTime(reader.GetDateTime());
    }

    // Write the date as an ISO 8601 string such as "2023-06-01"
    public override void Write(Utf8JsonWriter writer, DateOnly value, JsonSerializerOptions options)
    {
        var isoDate = value.ToString("O");
        writer.WriteStringValue(isoDate);
    }
}

// Program.cs
builder.Services.AddControllers()
    .AddJsonOptions(options =>
    {
        // Register the converter so controllers can serialize DateOnly values
        options.JsonSerializerOptions.Converters.Add(new DateOnlyJsonConverter());
    });

Imagine putting hours into an application, only to realize it isn't finding its way onto the web. That can be a major hassle. So getting the simplest version of the app live, with all the key components still in play, is a great first step in an iterative process.
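One cheap way to check a converter like DateOnlyJsonConverter before deploying is a local round trip. This is a sketch under a few assumptions: it registers the converter on a plain JsonSerializerOptions (standing in for AddJsonOptions in Program.cs), and Forecast and ConverterCheck are hypothetical names, not the template's actual WeatherForecast class.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

// Same converter as in the post: reads an ISO date string, writes "yyyy-MM-dd"
public sealed class DateOnlyJsonConverter : JsonConverter<DateOnly>
{
    public override DateOnly Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
        => DateOnly.FromDateTime(reader.GetDateTime());

    public override void Write(Utf8JsonWriter writer, DateOnly value, JsonSerializerOptions options)
        => writer.WriteStringValue(value.ToString("O"));
}

// Hypothetical payload, loosely shaped like a weather forecast
public record Forecast(DateOnly Date, int TemperatureC);

// Hypothetical helper that mirrors the Program.cs registration
public static class ConverterCheck
{
    public static readonly JsonSerializerOptions Options = CreateOptions();

    private static JsonSerializerOptions CreateOptions()
    {
        var options = new JsonSerializerOptions();
        options.Converters.Add(new DateOnlyJsonConverter());
        return options;
    }

    public static string ToJson(Forecast forecast) => JsonSerializer.Serialize(forecast, Options);

    public static Forecast? FromJson(string json) => JsonSerializer.Deserialize<Forecast>(json, Options);
}
```

If the round trip hands back an equal record, the converter is doing its job in both directions.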

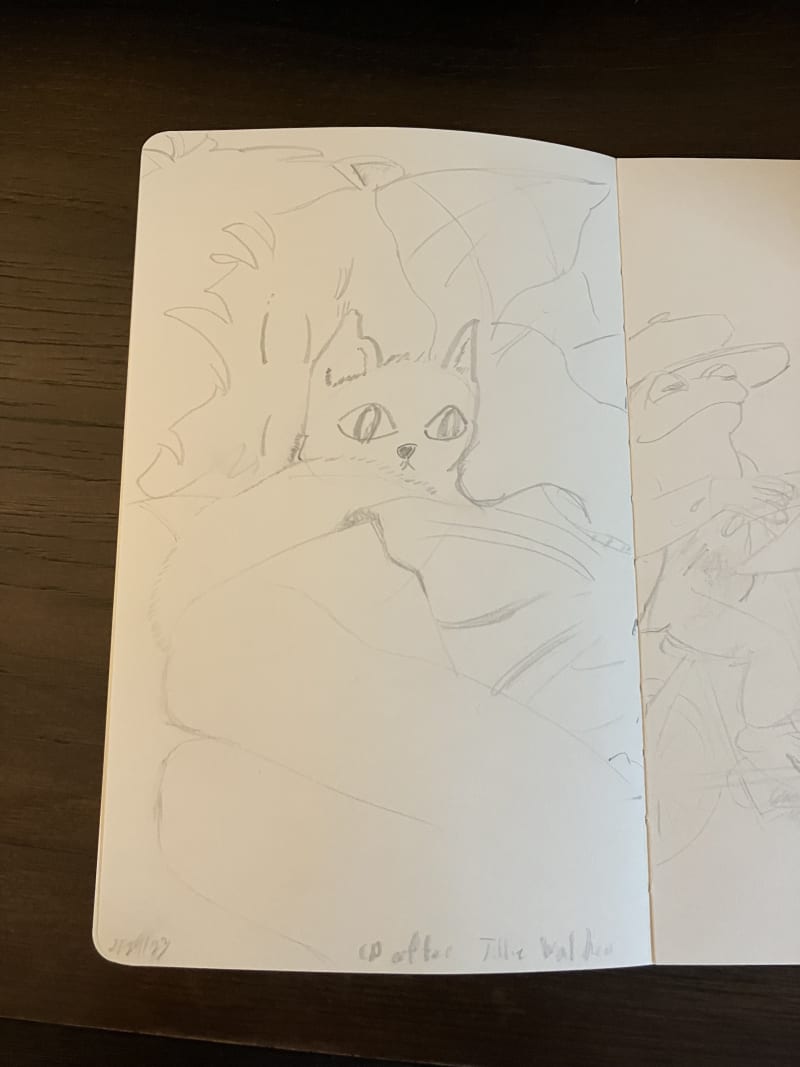

Tillie Walden Study

Line study after the amazing Tillie Walden, from her book "Are You Listening?" So wildly beautiful; you gotta go see the colors!

Also, a peek at Toad on the next page! 🐸

Aquarium Sketches

E Minor Cowboy Waltz 🤠

Improvising a Dosey Doe in the ol' 3/4 🌵

Polymorphism Through Abstract Classes in C#

One of the 3-7 pillars of Object Oriented Programming (number depending on who you talk to) is Polymorphism.

What is polymorphism?

When designing classes, it's sometimes helpful to have parent-child relationships. A "Vehicle" class could have attributes like "Passengers," "Color," and "Speed." It can have methods like "FuelUp," "LoadPassengers," etc.

Deriving from that, we can then have child classes for Cars and Boats.

Here's what that would look like in C#:

using System;
using System.Collections.Generic;

namespace ChrisGarage
{
    public class Vehicle
    {
        private List<string> Passengers = new List<string>();
        public readonly string Color;

        public void LoadPassenger(string passenger)
        {
            // . . .
        }

        public Vehicle(string color)
        {
            // Reject a missing color up front
            if (String.IsNullOrEmpty(color))
            {
                throw new ArgumentException("Color cannot be null or empty");
            }
            Color = color;
        }
    }

    // Children pass the color up to the Vehicle constructor
    public class Car : Vehicle
    {
        public Car(string color) : base(color) { }
    }

    public class Boat : Vehicle
    {
        public Boat(string color) : base(color) { }
    }
}

Easy!

So that's inheritance, but it's not quite polymorphism. Polymorphism is when child classes reshape inherited behavior to suit their own needs, while code written against the parent class still works with every child.

Continuing with the vehicle example, it's safe to say that all vehicles move. They'll need a "Move" method.

But a car does not move in the same way a boat moves!

We could define those separately on the children. But it's safe to say, if it's a vehicle, we expect it to move. We want the vehicles to conform to that shape.

Why? Say we're iterating through a list of vehicles and running the move method on them. We want to be sure that there is a move method.

We can enforce that through the abstract and override keywords.

using System;
using System.Collections.Generic;

// Declaring class as abstract, in other words, incomplete.
public abstract class Vehicle
{
    private List<string> Passengers = new List<string>();
    public readonly string Color;

    public void LoadPassenger(string passenger)
    {
        // . . .
    }

    public Vehicle(string color)
    {
        if (String.IsNullOrEmpty(color))
        {
            throw new ArgumentException("Color cannot be null or empty");
        }
        Color = color;
    }

    // Abstract method: no body here, so every derived class must override it
    public abstract void Move();
}

public class Car : Vehicle
{
    public Car(string color) : base(color) { }

    // Writing custom car algorithm
    public override void Move()
    {
        Console.WriteLine("Drive");
    }
}

public class Boat : Vehicle
{
    public Boat(string color) : base(color) { }

    // Writing custom Boat algorithm
    public override void Move()
    {
        Console.WriteLine("Sail");
    }
}

On the parent, we set the class as abstract and include an abstract method to signal that we want this method to be completed by developers designing the derived classes.

On the children, we use the override keyword and do the work of deciding how each vehicle should move.
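Here's the "iterate over a list of vehicles" case as a minimal sketch. To keep it checkable, Move returns a string instead of writing to the console, and Garage.MoveAll is a hypothetical helper; otherwise it mirrors the Vehicle, Car, and Boat classes above.

```csharp
using System;
using System.Collections.Generic;

public abstract class Vehicle
{
    public readonly string Color;

    protected Vehicle(string color)
    {
        if (string.IsNullOrEmpty(color))
        {
            throw new ArgumentException("Color cannot be null or empty", nameof(color));
        }
        Color = color;
    }

    // Every derived vehicle must say how it moves
    public abstract string Move();
}

public class Car : Vehicle
{
    public Car(string color) : base(color) { }
    public override string Move() => "Drive";
}

public class Boat : Vehicle
{
    public Boat(string color) : base(color) { }
    public override string Move() => "Sail";
}

// Hypothetical helper, only for illustration
public static class Garage
{
    // The loop only knows about Vehicle; each object's own override runs at runtime
    public static List<string> MoveAll(IEnumerable<Vehicle> vehicles)
    {
        var moves = new List<string>();
        foreach (var vehicle in vehicles)
        {
            moves.Add(vehicle.Move());
        }
        return moves;
    }
}
```

Because Move is abstract, a new Vehicle subclass that forgets to implement it won't compile, so the loop never meets a vehicle without a Move.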

A note on abstract: If you wanted to provide some base implementation, you could use the virtual keyword instead and write out your procedure. Virtual also makes overriding optional.
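A minimal sketch of the virtual version, assuming a hypothetical Shape base class (the Rectangle snippet that follows doesn't show it). Draw is virtual with a default body; Rectangle overrides it and calls base.Draw(), while a hypothetical Blob skips the override and keeps the default. Each call appends to a Log list instead of printing, so the order is easy to check.

```csharp
using System.Collections.Generic;

public class Shape
{
    // Records each drawing step so the behavior can be inspected
    public List<string> Log { get; } = new List<string>();

    // virtual: a default implementation that subclasses may keep or override
    public virtual void Draw()
    {
        Log.Add("Shape");
    }
}

public class Rectangle : Shape
{
    public override void Draw()
    {
        base.Draw();           // run Shape's default step first
        Log.Add("Rectangle");  // then add the Rectangle-specific step
    }
}

// No override, so Blob inherits Shape.Draw as-is
public class Blob : Shape { }
```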

From an override, you can then decide whether to call the base class's version of the method, the way Rectangle does here with Draw:

public class Rectangle : Shape
{
    public override void Draw()
    {
        base.Draw(); // run Shape's Draw first
        Console.WriteLine("Rectangle");
    }
}

Lessons From a Year of Blogging

My domain renewed and my blog turned a year old! What started as a fun technical project has turned into a wildly gratifying medium for expression. I'm starting to think it might even become my life's work!

I've learned a ton in the process of writing a bit each day. The first draft of this has a list of bullet points a mile long. Here are some of the most salient takeaways, and why I'll keep writing:

Performing on a Stage

The process truly is the product. The greatest gift of sharing online isn't really so that thousands of strangers can see what you create. It's in the making.

But there's something about performing on a stage that's different from the practice room.

It's the feeling of elevation and a sense of audience that can really stir the spirit. I write for myself every day in a journal, but I don't quite reach the same clarity of thought or spiritual sense of communing with the Great Creator.

Put another way — sure, in school you might doodle on your math homework. But when given a canvas that will be framed on a wall, there's a calling to create something more.

Maybe that's overstating things here. It's just a blog, after all.

But the feeling is there. It was the same with music. An audience of 3 is still an audience in a concert hall. Any audience is enough to complete the performance.

Clarity

I thought this was just a point for software, but it goes beyond that.

Writing about code helps clarify my thinking around it. That goes for writing about what I'm learning, topics I'm reading about, and projects I've built. It even goes for testing.

Turns out, the same is true for everything else. Writing a post about creativity solidifies my thoughts around it. I've had moments in the studio where I was stuck on something, and the thought "hey, didn't you write a blog post about practicing slowly?" actually came up.

There's the technical side of it, too. Wanting to record a guitar tune for this site makes my practice on it that much more focused. Same for art — having a platform to share pushes me to want to refine my line quality and try new skills.

Kleon makes a good point that writing helps him figure out what he has to say. The more I write, the more I feel a writing voice coming through. I become aware of what's valuable in what I have to say through — well — saying things.

Long Term Conversations

Alan Jacobs mentions that the best part of blogging is revisiting themes and topics:

Everyone who writes a blog for a while knows that one of the best things about it is the way it allows you to revisit themes and topics. You connect one post to another by linking to it; you connect many posts together by tagging. Over time you develop fascinating resonances, and can trace the development of your thought.

I'm just at the start, but I'll echo that already it's been fascinating holding a long term conversation with myself and other blogs and books.

It's Surprisingly Expressive

It's a great answer to the equation of Impression Minus Expression Equals Depression.

You know how there's probably that thing you're really into that's pretty niche (say, vintage radio collecting?) Or maybe the work you do is too technical to be good small talk fodder? Or maybe you just have those sensations, feelings, and impressions that are too nuanced to express in a passing conversation? Yeah, writing is good for that.

What makes a personal blog interesting is bringing in the full spectrum of what tickles your brain. Some days it's executing SQL queries through Python, other days it's musings on books, and other days it's memories from a fun weekend. Some of my favorite tech blogs have a wild amount of personality shining through them. It's fun to have many pots boiling!

All creative practices are actually really similar once you get past the details of medium. If you want an outlet but don't feel like you're creative ("I couldn't sing my way out of a paper bag!", "I can only draw stick figures!",) I think you ought to try blogging. You're a Mozart in your own way of speaking and living. Words are a pretty great way to capture your impressions.

👋

A year is just the start. Many of the folks I admire are prolific and diverse bloggers. If you've read, commented, emailed, or been a part of it in any way: hey, thanks! Here's hoping for many more years!

Holding With a Loose Grip

I have a nasty habit of holding my pencil with a mean pinch. I can't write for too long because my palm will get sore.

It's something I'm being mindful of now that I'm drawing regularly.

How it probably happened:

It's easy to imagine the same thing happening in other disciplines, and on a much more serious scale. On sax, I knew folks who developed tendonitis. I've heard of artists with focal dystonia. Even when I taught marching band, I saw students push themselves to overexertion.

So I'm stopping to ask myself: What if it were flipped? What if the way I did things was more important than the end result?

Here's how it's going so far with drawing: comically!

My lines were already pretty inaccurate; now they're hilariously so! It's both frustrating and...fun!?

It's come with an unexpected benefit, too. Focusing more on how I'm holding the pencil has made it so I can let go of the result. In other words, drawing is even more playful than it was before!

I'm already translating this to guitar and piano, to pretty great effect. Things are moving more slowly, but I don't leave either instrument with any cramps. Since the physical process is more pleasurable, lo and behold, I feel even more bouncy and light after a session.

W.A. Mathieu has a great essay in The Listening Book that advocates for practicing slowly. The message is different: practice slowly to learn quickly. But the sentiment is similar:

Lentus Celer Est

This is bogus Latin for Slow (lentus) Is Fast (celery). Write it large somewhere. It means you cannot achieve speed by speedy practice. The only way to get fast is to be deep, wide awake, and slow. When you habitually zip through your music, your ears are crystallizing in sloppiness.

Yet almost everyone practices too fast, their own music as well as others'. We want to be the person who is brilliant. This desire is compelling, and it can become what our music is about.

Pray to Saint Lentus for release from zealous celerity. Pray for the patience of a stonecutter. Pray to understand that speed is one of those things you have to give up—like love—before it comes flying to you through the back window.

Does it have to stop there? There's a big life metaphor here, I bet! Say, setting up your days so that you actually enjoy them, have energy for the most important things, and sustain your relationships.

I'm going to keep trying: Holding days and practices with a loose grip.