Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- Call a script with github-script

- From said script, scan for the latest post.

- Grab the relevant content (an image or video link)

- Integrate with my messaging system of choice, either:

- Twilio to send a text

- Amazon Simple Email Service

- Navigating to the correct page by button click (it's a SPA, so a necessary step instead of by URL directly.)

- Grabbing the column index for row values in the table header

- Iterating through the rows and extracting the data

Lazy Sunday Improv

Pup at Twilight

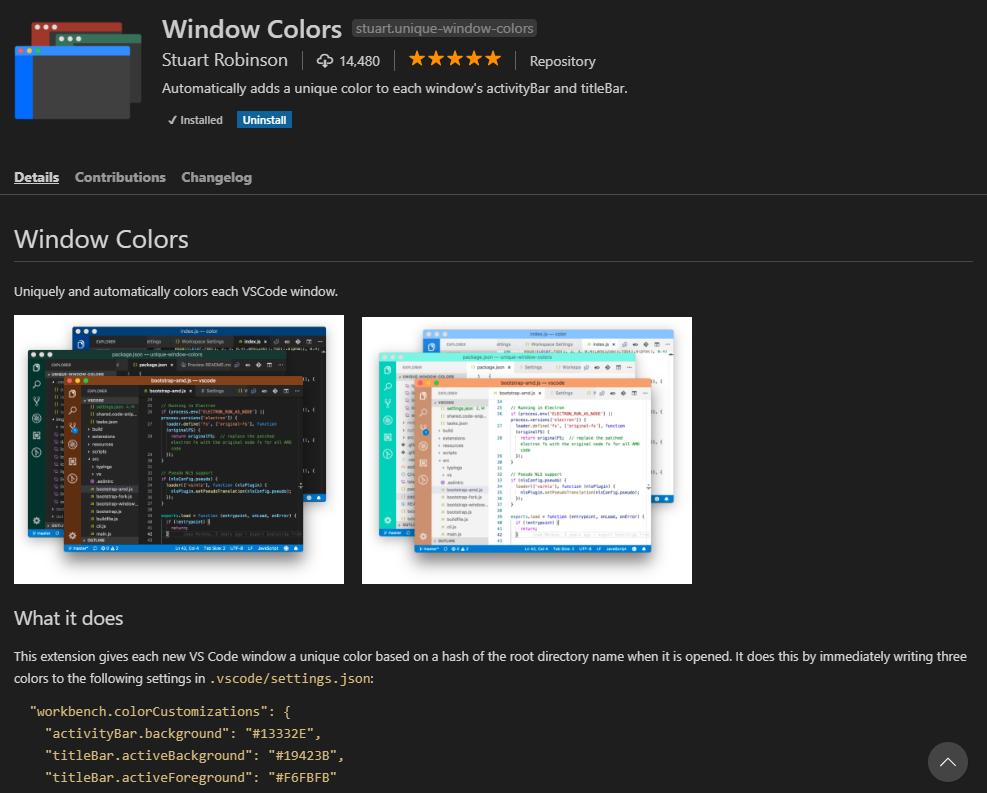

Customizing Title Bar Colors in VS Code

We're doing a lot of migration from one repo to another at work. And it's easy to mix up which-window-is-which when looking at two files that are so similar!

Enter custom Title Bar Colors! Not only does doing this look cool, it's actually incredibly practical!

I first came across this in Wes Bos' Advanced React course, and it's succinctly covered on Zachary Minner's blog: How to set title bar color in VS Code per project. So I'll let you link-hop over there!

If you don't want to pick your colors, it looks like there's even a VS Code extension that will do this automatically for you! (Thank you Stack Overflow!)

Blue Bossa

The Boy and the Heron

Regex Capture Groups in Node

I've been writing some regex for the blog. My blog is in markdown, so there's a fair amount of parsing I'm doing to get the data I need.

But it took some figuring out to understand how to get my capture groups back from a regex. Executing regex on single-line strings wasn't a problem, but passing in a multiline markdown file proved challenging.

So here's a quick reference for using Regex in Node:

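RegExp Class

```js
// Backslashes have to be doubled inside a string, or they're consumed before RegExp sees the pattern
new RegExp('\\[([^\\n\\]]+)\\]', 'gi').exec('[a][b]')
// ['[a]', 'a', index: 0, input: '[a][b]', groups: undefined]
```

Returns the full match first, then the capture group. There's also a groups property on the result, but it's only filled in for named groups like (?<name>...), so it's undefined in the above example.

The issue comes with multiline strings. exec does search the whole string, but a dot never matches a newline (unless you add the s flag), so a pattern leaning on .* can't reach across lines.

You would think to flip on the global flag, but trouble arises. More on that soon.

RegExp Literal

```js
/\[(a)\]/g.exec('[a][b]')
// ['[a]', 'a', index: 0, input: '[a][b]', groups: undefined]
```

Returns the full match first, then the grouped result. Same shape as the class!

I was curious and checked that it was an instance of the RegExp class:

```js
/fdsf/ instanceof RegExp
// true
```

And so it is! A literal and new RegExp(...) produce the same kind of object with the same methods. The literal just saves you from escaping backslashes inside a string.

String Match

```js
'[a][b]'.match(/\[(a)\]/g)
// ['[a]']
```

You can also call regex on a string directly, but with the global flag you'll only get the full matches. No capture group isolation. Drop the g and match returns the same array as exec; keep it and use matchAll instead to get every match along with its groups.

So for capture groups across a whole multiline file, match with the global flag won't cut it.

The Global Option

The global flag interferes with the result because it makes the regex stateful. A regex with g stores where its last match ended in lastIndex, and the next exec or test call picks up from there, whether the regex came from the RegExp class or a literal. That's handy for looping through every match, but reuse the same regex on a fresh string (say, the next markdown file) and the search can start partway in, skip the match you wanted, and return null.

The approach that worked for me for a multiline search with capture groups is a RegExp literal without the global flag.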

My working example:

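```js
// Find the first markdown image in the post and capture its URL.
// No prefix is needed: exec keeps scanning the string, newlines included, until it finds a match.
// Lazy .*? stops at the first ] and ) instead of the last ones on the line.
const artUrlRegex = /!\[.*?\]\((.*?)\)/;

const urls = artUrlRegex.exec(postMarkDown);

if (urls) {
  const imageUrl = urls[1];
  // . . .
}
```

Surprisingly complicated! Hope this helps direct any of your future regex use.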

Fly Me To The Moon

Walking the Bass, flying to the moon 🌙

Oatchi

Triggering Notifications through Github Actions

My Mom and Dad are not on RSS (surprising, I know!), nor are they on Instagram or Twitter or anywhere! But, naturally, they want to stay in the loop with music and art!

I've done a bit of digging to see how I could set up a notification system. Here's what I've got:

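This blog is updated by pushing markdown files to the GitHub repo. That triggers the build over on Vercel, where I'm hosting things. So the push is the starting point. From there, I can call a script with github-script, have it scan for the latest post, grab the relevant content (an image or video link), and send it through my messaging service of choice: either Twilio to send a text, or Amazon Simple Email Service.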

The workflow config for running a script file with github-script is very straightforward:

```yaml
name: Hey Mom

on: [push]

jobs:
  hey-mom:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/github-script@v7
        with:
          script: |
            const script = require('./.github/scripts/heyMom.js')
            console.log(script({github, context}))
```

The one concern I have here is external dependencies. From my preliminary research, it's best to keep the GitHub Action light, without any dependencies. Mostly, I want it to find the content quickly and send it off so the website can finish up the deploy cycle.

This would be a great case for passing that off to AWS Lambda. The lambda can take in the link and message body, load in dependencies for integrating with the service, and send it off from there.

Though, as I write this now, I realize I'd forgotten that Vercel, of course, is actually handling the build CI. This GitHub Action would run separately from that process.

Well, more to research! No code ready for this just yet, but we'll see how this all goes. Maybe the first text my folks get will be the blog post saying how I developed this. 🙂

What is Divine

Vocation, even in the most humble of circumstances, is a summons to what is divine. Perhaps it is the divinity in us that wishes to be in accord with a larger divinity. Ultimately, our vocation is to become ourselves, in the thousand, thousand variants we are.

From Finding Meaning in the Second Half of Life by James Hollis.

Stella By Starlight

That great symphonic theme... That's Stella by starlight~

Valentine

Automation & Web Scraping with Selenium

I had a very Automate The Boring Stuff itch that needed scratching this week!

I have an office in town that I go to regularly. I'm on a plan for X number of visits throughout the year. The portal tells me how many visits I have left, but there's a catch: my plan also includes two special one-on-one consults, ideally scheduled evenly through the year, and the portal doesn't let me know when I should redeem them.

I could just put in the calendar to do this on reasonable months – April and September. But what if I kick up my visit frequency? What if I'm the business owner and I want to track this for multiple clients? What if I just want to play around with Selenium for an evening?

So here's how I automated calculating this through the business's portal!

Selenium

If you've scraped the web or done automated tests, then you're likely familiar with Selenium. It's a package that handles puppeteering the browser of your choice through web pages as though a user were interacting with them. Great for testing and crawling!

If I were just crawling public pages, I could reach for Beautiful Soup and call it a day. However, the info I need is behind a login page. Selenium will allow me to sign in, navigate to the correct pages, and then grab the data.

Setup

Setting up the script, I'll do all of my importing and class initialization:

```python
import math
import os
import re
from datetime import datetime

from dotenv import load_dotenv
from selenium import webdriver
from selenium.common import exceptions
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions
from selenium.webdriver.support.ui import WebDriverWait


class Collector:
    def __init__(self):
        # Load EMAIL and PW from the .env file into the environment
        load_dotenv()
        options = webdriver.ChromeOptions()
        # Keep the browser window open after the script finishes
        options.add_experimental_option("detach", True)
        self.driver = webdriver.Chrome(options=options)
        self.login_url = "https://biz.office.com/login"
        self.account_url = "https://biz.office.com/account"

    def run(self):
        self.handle_login()
        visit_history = self.get_visit_history()
        self.get_remaining_visits_until_package_renewal(visit_history)


if __name__ == '__main__':
    Collector().run()
```

I'm storing my login credentials in an .env file, as one should! The "detach" option simply keeps the window open after the script runs so I can investigate the page afterwards if needed. The URLs are fictitious here.

So, all set up! Here's looking at each function one at a time:

Login

```python
def handle_login(self):
    AUTH_FIELD_SELECTOR = "input#auth_key"
    LOGIN_BUTTON_SELECTOR = "button#log_in"
    PASSWORD_FIELD_SELECTOR = "input#password"

    # Email page
    self.driver.get(self.login_url)
    self.wait_for_element(AUTH_FIELD_SELECTOR, 10)
    text_box = self.driver.find_element(By.CSS_SELECTOR, AUTH_FIELD_SELECTOR)
    login_button_elm = self.driver.find_element(By.CSS_SELECTOR, LOGIN_BUTTON_SELECTOR)
    text_box.send_keys(os.getenv('EMAIL'))
    login_button_elm.click()

    # Password page
    self.checkout_page(PASSWORD_FIELD_SELECTOR, 10)
    self.driver.find_element(By.CSS_SELECTOR, PASSWORD_FIELD_SELECTOR).send_keys(os.getenv("PW"))
    self.driver.find_element(By.CSS_SELECTOR, LOGIN_BUTTON_SELECTOR).click()
```

Selenium is nice to write because the code is so sequential and fluid. The code above lays out quite literally what's happening on the page. Once I've loaded the page and verified it's open, I'm just grabbing the elements I need with CSS selectors and using interactive methods like send_keys() to type and click() to press buttons.

Something worth pointing out is wait_for_element. It's mostly a wrapper for this Selenium code:

```python
WebDriverWait(self.driver, 30).until(
    expected_conditions.presence_of_element_located((By.CSS_SELECTOR, element_selector))
)
```

This asks Selenium to pause until a certain element is present in the page's DOM (not necessarily visible), raising a TimeoutException if it doesn't show up in time. Cumbersome to write every time, so nice to have it encapsulated!
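Under the hood, WebDriverWait.until is a polling loop. It calls the condition every half second by default (poll_frequency=0.5), ignoring NoSuchElementException between tries, until the condition returns something truthy or the timeout runs out. Here's a simplified, browser-free sketch of that pattern. wait_until is a hypothetical name, and unlike Selenium's version it doesn't swallow any exceptions and raises Python's built-in TimeoutError instead of Selenium's TimeoutException.

```python
import time


def wait_until(condition, timeout, poll_frequency=0.5):
    """Call condition() until it returns something truthy, or raise TimeoutError.

    A simplified sketch of the polling WebDriverWait.until does (hypothetical helper).
    """
    end_time = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() > end_time:
            raise TimeoutError(f'condition not met within {timeout} seconds')
        time.sleep(poll_frequency)


# Example: a pretend "page" whose element only shows up on the third check
checks = iter([None, None, '<input id="auth_key">'])
print(wait_until(lambda: next(checks), timeout=2, poll_frequency=0.01))
# <input id="auth_key">
```

Returning the condition's value, rather than just True, mirrors how presence_of_element_located hands back the element it found.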

Get Visit History

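Once logged in, it's time to navigate to the page with my visit history. The page contains a table with columns for visit date, session name, and the member name (me!). So below, I'm navigating to that page by button click (it's a SPA, so that's a necessary step instead of going to the URL directly), grabbing the column index for each value from the table header, and then iterating through the rows to extract the data.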
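The column matching itself doesn't need a browser. Here's a minimal sketch of that step on plain Python lists, assuming the header and cell texts have already been read out with get_attribute('innerText'). index_headers, extract_rows, and COLUMN_PATTERNS are hypothetical names, not part of the script.

```python
import re

# Hypothetical table: output key -> case-insensitive pattern to look for in a header
COLUMN_PATTERNS = {
    'date': r'date',
    'session': r'session',
    'member': r'member',
}


def index_headers(header_texts):
    """Map each key to the index of the header whose text matches its pattern."""
    headers_index = {}
    for i, text in enumerate(header_texts):
        for key, pattern in COLUMN_PATTERNS.items():
            if re.search(pattern, text, re.IGNORECASE):
                headers_index[key] = i
                break  # like the if/elif chain: first matching pattern wins
    return headers_index


def extract_rows(headers_index, rows):
    """Turn each row (a list of cell texts) into a dict keyed by column name."""
    visits = []
    for cells in rows:
        if not cells:  # e.g. a header row with no <td> cells
            continue
        visits.append({key: cells[i] for key, i in headers_index.items()})
    return visits


headers = ['Visit Date', 'Session Name', 'Member']
rows = [[], ['February 2, 2024', 'Plan Visit', 'Me']]
print(extract_rows(index_headers(headers), rows))
# [{'date': 'February 2, 2024', 'session': 'Plan Visit', 'member': 'Me'}]
```

Keeping the patterns in a dict means tracking another column is one more entry rather than another elif branch.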

```python
def get_visit_history(self):
    visits = []
    ACCOUNT_HISTORY_PAGE_BUTTON_SELECTOR = 'a[href="#history"]'
    headers_index = {}

    # It's a SPA, so navigate by clicking the link instead of loading a URL
    self.driver.find_element(By.CSS_SELECTOR, ACCOUNT_HISTORY_PAGE_BUTTON_SELECTOR).click()
    self.checkout_page(ACCOUNT_HISTORY_PAGE_BUTTON_SELECTOR, 10)

    # Record which column index holds each value we care about
    headers = self.driver.find_elements(By.CSS_SELECTOR, '#history tbody > tr > th')
    for h, header in enumerate(headers):
        header_text = header.get_attribute('innerText')
        if re.search(r'date', header_text, re.IGNORECASE):
            headers_index['date'] = h
        elif re.search(r'session', header_text, re.IGNORECASE):
            headers_index['session'] = h
        elif re.search(r'member', header_text, re.IGNORECASE):
            headers_index['member'] = h

    # Pull the matching cells out of every data row
    rows = self.driver.find_elements(By.CSS_SELECTOR, '#history tbody tr')
    for row in rows:
        row_dict = {}
        cells = row.find_elements(By.CSS_SELECTOR, 'td')
        # A header row with only <th> cells comes back empty here, so skip it
        if not cells:
            continue
        if 'date' in headers_index:
            row_dict['date'] = cells[headers_index['date']].get_attribute('innerText')
        if 'session' in headers_index:
            row_dict['session'] = cells[headers_index['session']].get_attribute('innerText')
        if 'member' in headers_index:
            row_dict['member'] = cells[headers_index['member']].get_attribute('innerText')
        visits.append(row_dict)

    return visits
```

Calculate Remaining Visits

From here, it's just math and looping!

Plan length and start date are hardcoded below, but could be pulled from the portal similar to the method above.

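With that, I'm counting down remaining visits for each entry in the visits table that's a "plan visit" dated on or after the start date. The loop relies on the history being listed newest first, so once a plan visit falls before the start date, we've passed the date threshold and can break the loop.

For the math, I split the plan length into thirds: the first consult is due when two thirds of the visits remain, and the second when one third remains. Subtracting those thresholds from the remaining visits gives the number of visits until each consult. If there's a positive difference, it's logged as X visits until the consult.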
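To make the counting and the thirds concrete, here's the same logic pulled out into two plain functions, run against a few made-up history rows with the post's 37-visit plan and January 1 start date. count_remaining and visits_until_consults are hypothetical names. Like the script, the sketch assumes the history is ordered newest first and that dates look like "February 9, 2024".

```python
import math
import re
from datetime import date, datetime


def count_remaining(visit_history, plan_length, start_date):
    """Count down plan visits dated on or after start_date.

    Assumes visit_history is ordered newest first.
    """
    remaining = plan_length
    for visit in visit_history:
        if not re.search('plan visit', visit['session'], re.IGNORECASE):
            continue
        # Keep only the text before any '-' so strptime sees just the date
        stripped_date = visit['date'].split('-')[0].strip()
        if datetime.strptime(stripped_date, '%B %d, %Y').date() < start_date:
            break  # everything from here on predates the plan
        remaining -= 1
    return remaining


def visits_until_consults(remaining, plan_length):
    """Return (visits until first consult, visits until second consult).

    Consults are due when two thirds and one third of the plan remain;
    0 or less means that consult is due or already past.
    """
    interval = math.floor(plan_length / 3)
    return remaining - interval * 2, remaining - interval


history = [  # hypothetical rows, newest first
    {'date': 'February 9, 2024', 'session': 'Plan Visit'},
    {'date': 'February 2, 2024', 'session': 'Consult'},
    {'date': 'January 5, 2024', 'session': 'Plan Visit'},
    {'date': 'December 1, 2023', 'session': 'Plan Visit'},
]
remaining = count_remaining(history, 37, date(2024, 1, 1))
print(remaining)                             # 35
print(visits_until_consults(remaining, 37))  # (11, 23)
```

With 37 visits, the interval floors to 12, so the consults land at 24 and 12 remaining visits. Because 37 doesn't divide evenly, flooring nudges both consults slightly later in the plan.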

```python
def get_remaining_visits_until_package_renewal(self, visit_history):
    PLAN_LENGTH = 37
    START_DATE = datetime(2024, 1, 1).date()
    remaining = PLAN_LENGTH

    for visit in visit_history:
        # Keep only the text before any '-' so strptime sees just the date
        stripped_date = visit['date'].split('-')[0].strip()
        if not re.search('plan visit', visit['session'], re.IGNORECASE):
            continue
        elif datetime.strptime(stripped_date, '%B %d, %Y').date() >= START_DATE:
            remaining -= 1
        else:
            # History is newest first, so everything from here on predates the plan
            break

    # Consults are due when two thirds and one third of the plan remain
    interval = math.floor(PLAN_LENGTH / 3)
    first_session = interval * 2
    second_session = interval
    visits_until_first_session = remaining - first_session
    visits_until_second_session = remaining - second_session

    if visits_until_first_session > 0:
        print(f'{visits_until_first_session} visits until first consult')
    elif visits_until_second_session > 0:
        print(f'{visits_until_second_session} visits until second consult')
    else:
        print(f"All consults passed! {remaining} remaining visits")

    return remaining
```

Done!

So there you have it! Automation, scraping, and then using the data! A short script that covers a lot of ground.

A fun extension of this would be to throw this up on AWS Lambda, run as a cron job once a week, and then send an email notifying me when I have one or two visits left before I should schedule the consult.

Will our lone dev take the script to the cloud?! Tune in next week to find out! 📺

Childhood's End

Thinking a great deal lately about the obstacles to play in art. Namely, how they aren't so much obstacles as they are just part of the journey.

From Stephen Nachmanovitch's Free Play:

Everything we have said so far should not be construed merely as an indictment of the big bad schools, or the media, or other societal factors. We could redesign many aspects of society in a more wholesome way—and we ought to—but even then art would not be easy. The fact is that we cannot avoid childhood's end; the free play of imagination creates illusions, and illusions bump into reality and get disillusioned. Getting disillusioned, presumably, is a fine thing, the essence of learning; but it hurts. If you think that you could have avoided the disenchantment of childhood's end by having had some advantage — a more enlightened education, more money or other material benefits, a great teacher — talk to someone who has had those advantages, and you will find that they bump into just as much disillusionment because the fundamental blockages are not external but part of us, part of life. In any case, the child's delightful pictures of trees mentioned at the beginning of this chapter would probably not be art if they came from the hands of an adult. The difference between the child's drawing and the childlike drawing of a Picasso resides not only in Picasso's impeccable master of craft, but in the fact that Picasso had actually grown up, undergone hard experience, and transcended it.

Slaughter Beach, Dog – Acolyte

Whistling and plucking along. My midwest emo phase continues!