Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- When using semantic classnames, you are tightly coupling your CSS with the HTML structure of your element. Naming systems like BEM make refactoring difficult.

- Naming things is one of the hardest parts of software. Here, you're not naming anything! You're simply styling with utility classes.

- Cascading styles, as well as adjusting classnames, can add to your CSS. With Tailwind, you're using utility classes to apply the same styles with minimal customization. That makes the CSS more maintainable.

- Similarly, codebases get bloated with CSS doing the exact same thing. My input__wrapper may be doing the same positioning as my button__wrapper class.

- Colocating CSS and HTML (and JS if using React, Vue, etc.), but without the performance hit.

- Integrate code with design standards

- Bring brand cohesion to an app

- Eliminate decision fatigue from having to design on the fly

- Zero Customizability: We built this to strongly enforce consistency for ourselves.

- Pre-Selected Variations: We’ve got accordions in three different colors.

- BYO Theme: We’ll give you a skeleton that loosely achieves the pattern and you apply the styles to your liking.

- describe is a wrapper for related tests.

- it is the keyword for a test. Essentially saying "Test this procedure."

- request is doing just that: sending an HTTP request.

- expect is our assertion. We are expecting our status to equal 200 for this link.

- Links with and without "Preloading"

- Font-Display settings "Block" and "Swap"

- Writing as clarifying thinking

- Abstraction bringing thought to greater abstracts

- Can't help but think of this XKCD comic on Python.

- The wildest package you'll ever pip install

- So now what do we write about?

- I've slowed down as the nitty-gritty coding details become less relevant. The search begins for new conversations worth having. Around the product? Simply go up an abstraction and talk more about design?

- Editing... Speed gains are huge in development, though some of that is lost to the bottleneck of review and revision. Claude Code seems to be the best tool here, in that human-in-the-loop review and consistent opportunities for feedback are its primary mode of operation.

Debussy - Rêverie

💭

Little snippet from the gorgeous Debussy piece.

Which was, fun fact, played in the final Anthony Hopkins scene in Westworld Season 1! Alongside a Radiohead string quartet cover, no less!

Terry Pratchett and Real Life Inspirations

It never really occurred to me growing up that I could work in software.

I grew up playing video games and when I finished them, credits would roll. A flood of names would dash across the screen.

My parents explained to me after watching a movie that it took all these people to make a film. And there was a realness to that.

But with video games, growing up with Nintendo, all the names were Japanese. And developers, unlike firefighters, police officers, and construction workers, don't have as clear an answer to Richard Scarry's question "What do people do all day?" As far as I knew: "Well wow, you have to be Japanese to make games!!"

It wasn't until I met living, breathing people that were making a career of working on the web that it clicked. And that's even after I had done it as a hobby since I was a kid!

Pratchett and Inspiration

I mentioned I'm reading Terry Pratchett's biography. I even wrote a bit about how he gained great satisfaction from the cycle of being a reader and writing for readers.

To continue the conversation - sharing things online helps a great deal. Here's further validation. This time, from the angle of the importance of having heroes that feel real.

Pratchett talks about writing fan mail to Tolkien in his biography, but I like this heartfelt clip from A Slip of the Keyboard: Collected Non-Fiction:

[W]hen I was young I wrote a letter to J.R.R. Tolkien, just as he was becoming extravagantly famous. I think the book that impressed me was Smith of Wootton Major. Mine must have been among hundreds or thousands of letters he received every week. I got a reply. It might have been dictated. For all I know, it might have been typed to a format. But it was signed. He must have had a sackful of letters from every commune and university in the world, written by people whose children are now grown-up and trying to make a normal life while being named Galadriel or Moonchild. It wasn’t as if I’d said a lot. There were no numbered questions. I just said that I’d enjoyed the book very much. And he said thank you. For a moment, it achieved the most basic and treasured of human communications: you are real, and therefore so am I.

Just like my last post, I can't help but think about the internet. How ridiculously lucky are we to have much closer access to artists, writers, developers — all world class?

Close To Home

It's the humanizing element that can solidify the inspiration, though. It's one thing to hear stories of born prodigies, and another to hear stories of people that started from a place closer to home.

Granny Pratchett told young Terry about G. K. Chesterton. She loaned him Chesterton's books, which he devoured, quickly becoming a fan. Most importantly, though, she told Terry that Chesterton was a former resident of their town, Beaconsfield.

[W]hat seemed to have grabbed Terry most firmly in these revelations was the way they located this famous writer on entirely familiar ground – in Beaconsfield, at the same train station where Terry had but recently laid coins on the tracks for passing trains to crush... It brought with it the realization that, for all that one might very easily form grander ideas about them, authors were flesh and blood and moved among us... And if that were the case, then perhaps it wasn't so outlandish, in the long run, to believe that one might oneself even become an author.

I think we all have stories like this. Some of us may even be luckier - our teachers were this living, breathing validation that what you dream of doing can be done by you.

A similar sentiment from my last post: it's wildly important for you to share your work online. Maybe it's even just as important that you do the work you're called to do, period, even if you're not on as global a scale as a published author. For many of us, it's the section-mates in band, the English teachers, the supervisor at work, or even the friends we know from growing up that help solidify dreams into genuine possibilities.

Not to mention the impact you could have, just by sending an email.

Where Does Tailwind Fit In Your App?

If you follow Brad Frost's brilliant atomic model for design systems, Tailwind is at the atomic level.

Tailwind is such an interesting paradigm shift from the typical approach to CSS. I'm still early into it, but I can say I'm starting to see where it shines.

We're in a prototyping phase of implementing a new design system. We're reaching for Tailwind to streamline a landing page design and simultaneously flesh out the reusable points of our system: fonts, color, spacing, and the like.

We're bringing this into a full-blown, full-stack application, though, that's already using styled-components for one portion and SCSS for another.

So that's where the question comes from - among all that, where does Tailwind fit? What problem is it really solving?

Tailwind Pros

Once you get past the funny approach to using it, here are the pros, some pulled from Tailwind's docs:

Not to mention, of course, that just like CSS custom properties, it's a way of working within a design system. Once you have your customizations set, spacing, colors, and breakpoints are all baked into these utility classes.

One nice feature that's not mentioned right out of the gate is that you can inline your pseudo-classes as well. Here's an example from the docs:
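As a rough illustration of where those customizations live, here's a minimal tailwind.config.js sketch in the Tailwind v3 style. The brand color, gutter spacing, and 3xl breakpoint are hypothetical values, not ones from our project; extending the theme this way is what makes classes like bg-brand, p-gutter, or 3xl:text-lg available.

```javascript
// tailwind.config.js
// Hypothetical design tokens; the names and values are placeholders.
const config = {
  // Files Tailwind scans for class names (Tailwind v3 `content` option).
  content: ['./src/**/*.{html,js,jsx}'],
  theme: {
    extend: {
      // Generates bg-brand, text-brand-dark, ring-brand-light, and so on.
      colors: {
        brand: { light: '#c4b5fd', DEFAULT: '#7c3aed', dark: '#5b21b6' },
      },
      // Generates p-gutter, mt-gutter, gap-gutter, and so on.
      spacing: { gutter: '1.5rem' },
      // Adds a 3xl: breakpoint prefix alongside the default breakpoints.
      screens: { '3xl': '1920px' },
    },
  },
  plugins: [],
};

module.exports = config;
```

Because these live under extend, Tailwind's default palette and spacing scale stay available; putting them directly under theme would replace the defaults instead.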

```html
<button class="bg-violet-500 hover:bg-violet-600 active:bg-violet-700 focus:outline-none focus:ring focus:ring-violet-300 ...">
  Save changes
</button>
```

It's Atomic, not Molecular

Early on, we came across this issue:

```html
<div class="mt-3 flex -space-x-2 overflow-hidden">
  <img class="inline-block h-12 w-12 rounded-full ring-2 ring-white" src="https://images.unsplash.com/photo-1491528323818-fdd1faba62cc?ixlib=rb-1.2.1&ixid=eyJhcHBfaWQiOjEyMDd9&auto=format&fit=facearea&facepad=2&w=256&h=256&q=80" alt=""/>
  <img class="inline-block h-12 w-12 rounded-full ring-2 ring-white" src="https://images.unsplash.com/photo-1550525811-e5869dd03032?ixlib=rb-1.2.1&auto=format&fit=facearea&facepad=2&w=256&h=256&q=80" alt=""/>
  <img class="inline-block h-12 w-12 rounded-full ring-2 ring-white" src="https://images.unsplash.com/photo-1500648767791-00dcc994a43e?ixlib=rb-1.2.1&ixid=eyJhcHBfaWQiOjEyMDd9&auto=format&fit=facearea&facepad=2.25&w=256&h=256&q=80" alt=""/>
  <img class="inline-block h-12 w-12 rounded-full ring-2 ring-white" src="https://images.unsplash.com/photo-1472099645785-5658abf4ff4e?ixlib=rb-1.2.1&ixid=eyJhcHBfaWQiOjEyMDd9&auto=format&fit=facearea&facepad=2&w=256&h=256&q=80" alt=""/>
  <img class="inline-block h-12 w-12 rounded-full ring-2 ring-white" src="https://images.unsplash.com/photo-1517365830460-955ce3ccd263?ixlib=rb-1.2.1&ixid=eyJhcHBfaWQiOjEyMDd9&auto=format&fit=facearea&facepad=2&w=256&h=256&q=80" alt=""/>
</div>
```

Lots of repeating styles. I assumed there would be a way to componentize this through Tailwind. They provide it as an option through the @apply directive on a custom class. However, it's largely not recommended to do this.

The docs argue that "Hey, this is what React is for!"
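Here's a framework-free sketch of that idea, written as plain JavaScript functions so it runs without a JSX build step. The avatar and avatarStack names and the example URLs are hypothetical; in a React app these would be an Avatar component rendered in a list. The point is that the utility classes live in one place, and each call site passes only what varies.

```javascript
// Hypothetical stand-in for a React <Avatar /> component: the shared utility
// classes are defined once, and callers pass only what varies (src, alt).
// No HTML escaping is done, so this assumes trusted input.
const AVATAR_CLASSES = 'inline-block h-12 w-12 rounded-full ring-2 ring-white';

function avatar({ src, alt = '' }) {
  return `<img class="${AVATAR_CLASSES}" src="${src}" alt="${alt}"/>`;
}

// Equivalent of the wrapper <div> from the docs example, rendering a list.
function avatarStack(people) {
  const images = people.map((person) => avatar(person)).join('');
  return `<div class="mt-3 flex -space-x-2 overflow-hidden">${images}</div>`;
}

// Placeholder URLs, not the Unsplash images above.
const html = avatarStack([
  { src: 'https://example.com/a.jpg' },
  { src: 'https://example.com/b.jpg', alt: 'Second teammate' },
]);
console.log(html);
```

Changing the avatar's look now means editing one string instead of five class attributes, which is the same payoff @apply offers, just at the component level.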

So there's a clear line: Tailwind isn't meant to fit anywhere higher than the atomic level, higher than a button or input field.

Tailwind is a powerful design system tool that serves that very particular, atom-level purpose in your application. It takes low-level CSS writing to a greater abstraction and solves the same co-location problem that CSS-in-JS solves.

We may end up dropping Tailwind from our project in favor of making our own custom classes, but I'll be interested to try Tailwind out a bit more in personal projects.

What, Why, and How of Design Systems

I'm involved in setting up a new design system on a project. From a high level, I've been taking a look at what that means in a React application.

Really quick, here's a succinct definition: "A design system is a collection of reusable components, guided by clear standards, that can be assembled together to build any number of applications."

The aim of a design system is mainly to:

There's A Spectrum

For most developers, the first thing that comes to mind would be Bootstrap. You get an accordion, you get buttons, some basic layout components, and many other goodies. They integrate with React, or you can use plain HTML and CSS.

Bootstrap, however, is on a spectrum of design systems.

Chris Coyier demonstrates this pretty well by asking "Who are design systems for?" The extremes of the spectrum are based on customizability, according to the other, more famous Chris:

Material UI by Google, for example, pretty rigidly feels like a Google product.

But then, you have the list of "Classless CSS" files that just give you some basic stylings for the html elements. And those are a design system too.

Arguably, the browser defaults for HTML tags are a design system!

Where To Go on the Spectrum

So how heavy handed a design system is depends on the project and team. And if you're developing your own, it's something that's developed organically over time.

A loose, light design system may need more rigidity as the team and app grow.

Even structurally, your design system will likely have a spectrum of component sizes, spanning from atomic elements to templates and pages, as Brad Frost recommends.

But, likely at the start, a few CSS variables with semantic colors and sizing are a good place to begin.
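For example, a first pass might be nothing more than a handful of semantic tokens emitted as CSS custom properties. The sketch below generates a :root block from a plain object; the token names and values are made up for illustration.

```javascript
// Hypothetical starter tokens: semantic names for color and spacing.
const tokens = {
  color: { primary: '#2563eb', danger: '#dc2626', text: '#111827' },
  space: { sm: '0.5rem', md: '1rem', lg: '2rem' },
};

// Flatten { group: { name: value } } into custom properties like --color-primary.
function toCssVariables(tokenGroups) {
  const lines = [];
  for (const [group, entries] of Object.entries(tokenGroups)) {
    for (const [name, value] of Object.entries(entries)) {
      lines.push(`  --${group}-${name}: ${value};`);
    }
  }
  return `:root {\n${lines.join('\n')}\n}`;
}

const css = toCssVariables(tokens);
console.log(css);
```

Components would then reference var(--color-primary) or var(--space-md) rather than raw values, so a palette change touches one place.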

How To Use and Implement

Routes to take include off-the-shelf open source frameworks, customizing those as you go along, developing a component library — the options are plentiful, but many folks point to starting with a template and building from there.

Dave Rupert, deep in the trenches of design system implementation, actually brings up an interesting point about considering these design systems as "Tools Not Rules." As human beings, we want to stretch our design chops. If you're a front end focused dev, you likely get satisfaction from crafting the design yourself to some extent.

On a smaller project with a small team, I believe it's fairly easy to keep some freedom while still using a system.

At the end of the day, similar to a framework, it's meant to suit the user. Your team will decide the frameworks used, to what degree of customization, and even how technically proficient you need to be to use the system.

Pratchett Had Libraries, We Have the Internet

The internet is a special place for people who love to create. Sure, you get access to humanity's entire collective knowledge, online shopping, and memes. But I think the secret sauce of what makes the internet a net positive is in how it nurtures and inspires creative communities.

Before it, there were libraries.

I'm reading author Terry Pratchett's biography. Growing up, he unsurprisingly was absorbed in books and practically lived in his local library. At an early age, Pratchett was marked as a below-average student. Through books and the library, though, his creativity and talents flourished.

From the biography, on how it all began at the library -

And, again, it all threaded back to Beaconsfield Library and those string-tied volumes, pushed across the desk. That this 'twit and dreamer' (Terry's phrase again) who found possibilities for himself in a library went on to become the author of books that themselves ended up in libraries, where they could be found by other twits and dreamers in search of possibilities for themselves... I would maintain that the circularity of this outcome satisfied Terry more lastingly and more deeply than any other of the outcomes of his professional success.

Guilty - these days my greatest influences are the ones I discovered online. Some new, some from growing up. And what drives me forward in continuing to make things is that I'm giving back to that online collection of work.

Of course, the internet is even more empowering because a much wider spectrum of creative work ends up on here.

If you're doing this, if you're writing/drawing/musicing/creating online, thank you. More than likely, you're inspiring someone that just needs a model. Someone doing their thing, showing other people that what they want to make can be made.

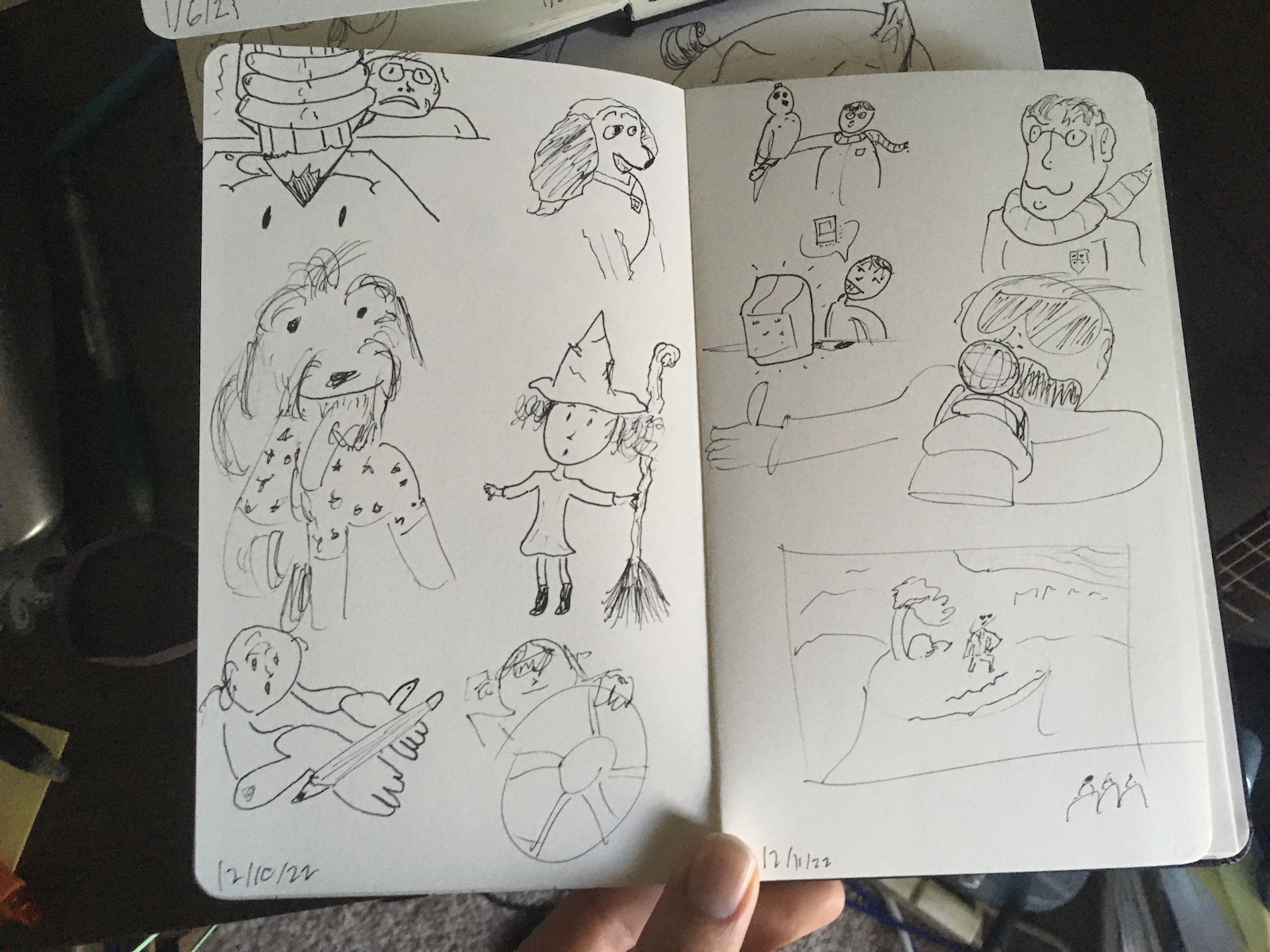

Finishing a Sketchbook

I reached a funny spot creatively in September. I was making lots of things, but they were all purely digital. Each day also finished with "well, I'm in the process of making something. Not much to show today, though!" There's something that felt a little incomplete about having large creative projects, but no small ones.

A couple of funny intersections happened. I listened to Austin Kleon talk about the benefits of journaling and I picked up reading a few of my favorite art blogs again.

So to fill the gap, I got inspired to sketch every day in these guys:

And it's been excellent!

Three months later, I filled the last page of the Moleskine!

It's a wildly satisfying feeling — one that's not quite recreated on the internet with paginated content and infinite scroll. A website is never full. But a completed sketchbook is a physical, in-your-hands unit. A way of marking time and space while also messing around!

I love making things digitally. But just like with music, keeping a tactile craft going is all the more warm and satisfying. Playing piano and guitar helps balance music production. Writing by hand balances blogging. Sketching in a journal is part of that balance, too!

It's best kept largely private to keep it free, but I'll indulge a little! Here are a few of my favorite pages:

Greensleeves

I know I'm not the only one that keeps Christmas decorations around well into the New Year.

Blog Tags

At the end of the year, I noticed a few threads in my blog. Namely tech articles, but a few categories I want to keep following: Books, Art, and Music.

So I'm creating those buckets through tags.

Several blogs have them, but it's Rach Smith's use of a few main themes that inspired me.

I've heard somewhere that tags largely are more for the author than the reader. How often a visitor filters by tags (or even digs deep in the archive, as Alan Jacobs noted) is pretty minimal.

I think there's still value in saying "Hey, I write about this stuff, here are some thoughts on these things."

But I'll agree, it's probably more for me! When I have a bucket, I like filling it. So keeping Art and Music buckets will lead to more long-term thoughts in those categories, even if this space is largely for technical articles.

Besides, I like the colors.

Setting Up End-to-End Testing with Cypress

After musing on the design benefits of testing, I'm rolling up my sleeves! I'm diving into setting up Cypress with my blog.

This was also inspired by a message I received lately about my RSS feed being down! After hastily writing a fix, I wanted to start setting up measures to keep a major bug from flying under the radar.

Cypress

A post for another day is the different types of tests in software. The quick rundown, from atomic to all-encompassing, goes like this: Static Tests, Unit Tests, Integration Tests, and End-to-End Tests.

Cypress is on the farther end of that spectrum, encompassing End-to-End testing. Cypress will spin up a browser, walk through your application, and use the site as a user would.

There's a suite of tools and assertion methods included from the popular Mocha and Chai testing frameworks.

For my blog, I don't have many complex features. It's largely a static site. If we were to define the happy path, it would mostly entail clicking links and reading articles. So asserting that will be as simple as gathering links and checking their status codes on request.

Cypress setup is laid out nicely in their docs. For a few more handy utility methods, you can also install the Cypress Testing Library:

```shell
$ npm i -D cypress @testing-library/cypress
```

Note the -D flag. For both Cypress and any testing packages, you'll want to save these as dev dependencies. We're not planning on shipping any of these modules with the app, so it's an important distinction.

Writing Tests

After initializing the app with the following command:

```shell
npx cypress open
```

Cypress will lay out boilerplate files and directories for the app. I'm going to add a cypress/e2e/verifyLinks.js file for my own tests. Here's what it will look like:

```javascript
import { BASE_URL, BASE_URL_DEV } from '../../lib/constants';

const baseUrl = process.env.NODE_ENV === 'production' ? BASE_URL : BASE_URL_DEV;

describe('RSS', () => {
  it('Loads the feed', () => {
    cy.request(`${baseUrl}/api/feed`).then((res) => {
      expect(res.status).to.eq(200);
    });
  });
});
```

To break down a few of the testing-specific methods:

A simple example, but once this is up and running, it will verify that the request is indeed returning an "OK" status, and we can rest all the more easily tonight.

And that's enough to get started! Next steps would be to write a few more critical tests, perhaps crawling the page for links and verifying that they all return 200. And with more tests, integrating them into a CI workflow would make the app all the more secure without as many manual checks in our local environment. More to come!
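For that crawling step, one possible approach is a small helper that pulls unique same-site links out of a page's HTML, which a spec could then loop over. The extractInternalLinks name is hypothetical, and a regex is only reasonable for markup you control; a sturdier crawler would use an HTML parser.

```javascript
// Hypothetical helper: collect unique, same-site links from a page's HTML.
// A regex is good enough for markup you control; use a real HTML parser otherwise.
function extractInternalLinks(html, baseUrl) {
  const origin = new URL(baseUrl).origin;
  const hrefs = [...html.matchAll(/<a\s[^>]*href="([^"]+)"/gi)].map((match) => match[1]);
  const internal = hrefs
    .map((href) => new URL(href, baseUrl)) // resolve relative links like "/blog"
    .filter((url) => url.origin === origin) // drop external and mailto: links
    .map((url) => url.origin + url.pathname); // ignore #hashes and ?queries
  return [...new Set(internal)]; // de-duplicate
}

const page = [
  '<a href="/blog">Blog</a>',
  '<a href="/api/feed">RSS</a>',
  '<a href="https://twitter.com/someone">Twitter</a>',
  '<a class="nav" href="/blog#top">Blog again</a>',
].join('\n');

console.log(extractInternalLinks(page, 'https://example.com'));
// [ 'https://example.com/blog', 'https://example.com/api/feed' ]
```

Inside a Cypress spec, each returned URL could then be passed to cy.request(url) and checked with .its('status').should('eq', 200), the same assertion the RSS test makes.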

Font-Display and CLS

Overview

I've written before on how tricky it can be dealing with external fonts and maintaining a healthy CLS score. This week, I had the chance to revisit it with the suggestion of exploring the particular load settings.

The Fastest Google Fonts

Below I'm snipping Chris Coyier's sample from Harry Roberts' amazingly thorough analysis on font performance. The idea is that you can render text immediately and load fonts quickly by adding preload and preconnect link attributes to your sources.

```html
<link rel="preconnect"
      href="https://fonts.gstatic.com"
      crossorigin />

<link rel="preload"
      as="style"
      href="$CSS&display=swap" />

<link rel="stylesheet"
      href="$CSS&display=swap"
      media="print" onload="this.media='all'" />
```

In my use case, this is exactly what was already in place.

Variables - Preload and Font-Display

Breaking down the different parts, we have a few variables to play with:

Preloading does not block, but marks the asset as a high priority and schedules it early on in the page lifecycle. More details are available on MDN.

Preconnect is similar. It lets the browser know that resources will be needed from this source early on.

As per Google's definition:

Block, which is effectively the default in most browsers, leaves a 3-second window for the font to be fetched and loaded before "inking" the text. (Text is rendered, but in "invisible ink.")

Swap renders text immediately and then swaps the font style on load.

Experimenting

Even with preloading and preconnecting in place, there's still enough delay that the CLS score was catching the "swap" on the page.

So I toyed around with the above variables, mixing and matching preloading with block and swap.
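To flip between block and swap while experimenting, only the display parameter on the Google Fonts stylesheet URL (the $CSS&display=swap part above) has to change. A small sketch, assuming the Google Fonts CSS2 API URL format; googleFontsHref is a hypothetical helper and Open Sans is just an example family.

```javascript
// Valid values for the CSS font-display descriptor.
const DISPLAY_VALUES = ['auto', 'block', 'swap', 'fallback', 'optional'];

// Hypothetical helper: build a Google Fonts CSS2 URL with an explicit display value.
function googleFontsHref(family, weights, display = 'swap') {
  if (!DISPLAY_VALUES.includes(display)) {
    throw new Error(`Unknown font-display value: ${display}`);
  }
  // Spaces in family names become "+", weights are ";"-separated.
  const familyParam = `${family.replace(/ /g, '+')}:wght@${weights.join(';')}`;
  return `https://fonts.googleapis.com/css2?family=${familyParam}&display=${display}`;
}

console.log(googleFontsHref('Open Sans', [400, 700], 'block'));
// https://fonts.googleapis.com/css2?family=Open+Sans:wght@400;700&display=block
```

The same URL goes into both the preload and stylesheet link tags, so they stay in sync when the setting changes.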

Here's what I found: there was a noticeable drop in both of the options that use the font-display: block; setting! From a 0.003 range to a 0.002 range on a page with mostly hero and navigation text.

Of course, there's a tradeoff: the CLS score here is improved as a result of delaying text rendering. It's only a 3-second window, but it's now common practice to aim for 2-second load times for webpages. At the very least, in this case, a fallback font is shown after that window elapses.

I'm waiting to hear back on what the field data reports with this change, but I'm hoping this is a move in the right direction!

Testing Software for the Same Reason You Write Notes

My work as of right now isn't largely test-driven. The scale I work at isn't as dependent on tests; the codebases are small enough that I'm not as concerned about glossing over any unwanted side effects.

However, I can't deny the benefits of testing.

Of course, having tests in place helps solidify the sturdiness of your application. There's a certain reassurance that comes with shipping code that is backed by working tests. And while developing, there's a much tighter feedback loop when refactoring the parts of your code the tests cover.

Dave Thomas and Andy Hunt in The Pragmatic Programmer make a much more interesting case: Writing tests leads to writing better code. The gist being that testable code is well-organized and thought-out code.

I think, largely, there's a parallel here with writing blogs/articles/notes as a mode of thinking.

I mentioned in my 2022 reflection that "Articles help solidify knowledge." Put another way, they bring an organization to the otherwise sloshy thought process that our human brain operates under.

A quote of a quote: Chris Coyier links Marc Brooker on the Magic of Writing with this gist:

I find, more often than not, that I understand something much less well when I sit down to write about it than when I’m thinking about it in the shower. In fact, I find that I change my own mind on things a lot when I try write them down. It really is a powerful tool for finding clarity in your own mind.

So here's my understanding:

Even if you aren't dependent on the tool of running tests locally and in a CI/CD integration, there are still design benefits to writing tests. Code that doesn't have tests may not be testable, which lends itself to a more interdependent design rather than a modular one.
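A small example using the Cypress spec from the post above: its baseUrl line reads process.env directly. Pulled into a function that takes the environment as an argument, the same logic becomes trivial to test. This is a hypothetical refactor, and the URLs are placeholders rather than the blog's real constants.

```javascript
// Hypothetical refactor of the spec's baseUrl line. The URLs are placeholders,
// not the blog's real values from lib/constants.
const BASE_URL = 'https://www.example.com';
const BASE_URL_DEV = 'http://localhost:3000';

// Taking the environment as an argument, instead of reading process.env
// inside, is what makes this easy to test in isolation.
function getBaseUrl(nodeEnv) {
  return nodeEnv === 'production' ? BASE_URL : BASE_URL_DEV;
}

// In the spec itself: const baseUrl = getBaseUrl(process.env.NODE_ENV);
console.log(getBaseUrl('production'));  // https://www.example.com
console.log(getBaseUrl('development')); // http://localhost:3000
```

Nothing about the behavior changed; the code just had to be shaped so a test could reach it, which is the design pressure The Pragmatic Programmer is pointing at.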

2022

I'm usually highly preoccupied with today and tomorrow — I easily forget yesterday. So, I'm going to counter that by being intentional here about taking a pause, reflecting back on what's been a really exciting year.

Software

I released a video game with my sister! It was a big stretch, it was fun, it was a real challenge at times, and it was ridiculously rewarding! I'm proud of us for making something so expansive. And I'm ready to work on a much smaller and more divergent scale.

I'm continuing the cycle of learning, building, and serving at AptAmigo! Nothing but good there still, I'm very excited to continue contributing!

My Personal Site

This one! I'm happy to have launched my site this year. I've really enjoyed dipping into the codebase with new feature ideas. This site has been a fun sandbox! There's also something to be said about having a home on the internet. Maybe that's a bit too romantic, but I enjoy having a sort of stage for all kinds of creative projects. A paradoxically intimate and wildly public one.

Writing

This year I wrote 51 articles on my blog. Most of them technical, a few on creativity, some on books, and a handful just for fun.

If you've had the pleasure of writing or creating on the internet, you know the intrinsic value of it. I'm so happy to find that the reward of a digital garden is in the tending. Articles help solidify knowledge, they give platforms for exploring, and it's a handy way to help others with their problems by sharing what I learn. I know many people say that the benefit of writing on the internet is the connections between ideas that come over time, and I'm excited to start seeing those insights come together!

Music

I wrote about how it was surprisingly hard to get started writing music. Even though I've performed for most of my life, writing music had been a dream for just as long. This year I broke through the atmosphere! I have been writing and making music all year. I've learned guitar, piano, and basic DAW skills. With 12 albums out and a few more on the way already, I'm really excited to continue exploring this new vehicle of expression.

Books

I tried not to read so much this year and failed wonderfully. I dipped into books on coding, creativity, personal psychology, and fiction. You can see what all I read over on My Reading Year.

Dallas

Miranda and I moved to Dallas this year! We both love it here so far! We're not entirely sure where we'll be in a few years, but it's safe to say we're happy to still be in Texas and in a place where we have friends both old and new.

I Turned 30

Now halfway through my first year of a new decade, I can distinctly feel the difference between phases of life. IYKYK. If you don't, I wrote a few lessons from my time in my 20s.

2023

These days, I'm wary of specific, long term goals. But I do have horizons I'm stepping towards. And honestly, they look a lot like the paragraphs above! More music, software, connection, and writing.

I'll see you there!

Hosting an Express Rendered React App on Elastic Beanstalk

I'm picking up the story from this article on moving my old projects from Heroku to AWS. Previously, I covered how to set up an Express app in Node for deployment to Elastic Beanstalk. This time, we're going to look at wrangling in a React app served from an Express app, all in one application for Elastic Beanstalk.

Config Files

There are a few config files we need to set up for production on Elastic Beanstalk:

proxy.config

When setting up our Express App to serve React, our server port may have been 4000 or 5000. But when setting up an Express App for Elastic Beanstalk, we used 8081, the port Elastic Beanstalk's Nginx proxy forwards requests to, to avoid the Nginx 502 status code. So here, my React proxy will point to that same port:

```json
// client/package.json
{
  ...
  "proxy": "http://localhost:8081/"
}
```

There's a bit of murky documentation and understanding in this realm, but there's another way to adjust this for production: a .ebextensions/proxy.config file. If you want to run port 4000 for the server in local development and configure your application to route to the same port in Elastic Beanstalk, you can do just that.

My solution required both setting my port to 8081 and including the proxy.config file, though my understanding is you should be OK with just one or the other. In my case, I was able to test locally with the port set to 8081, so I had no qualms using it, though I know it's meant to explicitly stay open for other purposes. This portion will be a choose-your-own-adventure!
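One way to keep both options open is to read the port from the environment and fall back to 8081. A minimal sketch; resolvePort is a hypothetical helper, and whether Elastic Beanstalk supplies a PORT variable depends on your platform version, so check its docs before relying on it.

```javascript
// Hypothetical helper: prefer a PORT environment variable, else use 8081.
function resolvePort(env) {
  const fromEnv = Number.parseInt(env.PORT, 10);
  // parseInt returns NaN for a missing or non-numeric PORT.
  return Number.isInteger(fromEnv) && fromEnv > 0 ? fromEnv : 8081;
}

const PORT = resolvePort(process.env);
console.log(`Server will listen on port ${PORT}`);
// In server.js this would feed app.listen(PORT, ...).
```

Locally, running PORT=4000 npm run server would then start the server on 4000, while a deploy with no PORT set lands on 8081 to match the Nginx upstream.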

You can follow this AWS doc on setting up the proxy.config with this added line:

```yaml
# .ebextensions/proxy.config
files:
  /etc/nginx/conf.d/proxy.conf:
    ...
    content: |
      upstream nodejs {
        server 127.0.0.1:8081;
        keepalive 256;
      }
```

One other portion is important for serving React static files: creating an alias for our build folder.

By default, AWS will search for static files at the root. The path will look like this:

```
/var/app/current/static;
```

Ours will be nested within our application, though, so we'll want to add this adjustment as well:

```nginx
location /static {
    alias /var/app/current/client/build/static;
}
```

Procfile

Supposedly, Elastic Beanstalk knows to use npm start by default when working with a Node environment.

Continuing with the murky documentation from earlier, this wasn't the case initially when I first uploaded the project. Adding a Procfile seemed to move the needle for me. It's a simple addition to the root of the project:

```
web: npm start
```

YMMV here; most discussion I found pointed to this as an outdated solution. It may work for your case, however, as it did for me!

Deploy!

Just as before, you should be set to deploy either through the EB CLI or from the AWS Console. This guide mentioned previously will get you through the console.

And that's it! We're set to work with React and Express side-by-side within one host in the cloud!

Claude Code So Far

Rendering a React App from an Express Server

I'm revisiting my older portfolio pieces from a few years ago with a new understanding. One of my favorites is a MERN stack app where React is served from the Express server. Here, I'll share the bird's-eye view on setting up these applications to be served from the same host.

Why One App?

The common way to serve a stack with React and Express is to host them separately. Within the MERN stack (MongoDB, Express, React, and Node), your database is hosted from one source, Express and Node from an application platform, and your React app from another, JAMstack-friendly source such as Netlify or Vercel.

The benefits of that are having systems with high orthogonality, as Dave Thomas and Andy Hunt would put it in The Pragmatic Programmer. With one application purely concerned about rendering and another handling backend logic, you leave yourself with the freedom and flexibility to swap either out without much fuss.

Segmenting the application in this way also allows you to serve your API to multiple platforms. You can support a web client as well as a mobile app with the same API this way.

However, it gets tricky if you begin to incorporate template-engine-rendered pages from your Express app in addition to a React application. This may be the case in a site that you want to optimize with templated landing pages, and that also hosts a dynamic web application following user login.

Following the curiosity of exploring the latter option, I'm going to dive into deploying my Express application with a React app from the same application server.

File Structure

When starting from scratch, you'll want to initialize your Express app at the root, and then the React app one level lower, within a client folder. It will look something like this:

```
.
├── .ebextensions/
├── modules/
│   └── post.html
├── client/
│   ├── build/
│   ├── public
│   ├── src
│   ├── package.json
│   └── package-lock.json
├── models/
├── routes/
├── modules/
├── package.json
├── Procfile
├── README.md
└── server.js
```

You'll notice two package.json files. Be mindful of what directory you're in when installing packages from the CLI.

You'll also see two items specific to our AWS setup: Procfile and .ebextensions/. More on those later!

Proxy Express

In the React app, I have requests that use same-origin requests like so:

```javascript
axios
  .get(`/api/${params}`)
  .then((movies) => {
    const moviesObj = {};
    movies.data.forEach((movie) => (moviesObj[movie._id] = movie));
    this.setState({
      movies: moviesObj,
    });
  })
  .catch((err) => console.log(err));
```

Note the /api/${params} URL. Since we're serving from the same source, there's no need to express another origin in the URL.
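The forEach inside that callback is just indexing the response by _id. Pulled out as a small pure function (indexById is a hypothetical name), the step is easier to read and to test on its own.

```javascript
// Turn an array of documents into an object keyed by each document's _id,
// the same shape the component stores in state.
function indexById(docs) {
  return Object.fromEntries(docs.map((doc) => [doc._id, doc]));
}

// Sample data standing in for movies.data from the API response.
const movies = [
  { _id: 'a1', title: 'Alien' },
  { _id: 'b2', title: 'Brazil' },
];
console.log(indexById(movies));
```

In the component, the callback body would shrink to this.setState({ movies: indexById(movies.data) }).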

That's the case in production, but we also have an issue locally. When we run Express and React in local development, it's typically with these npm scripts:

```json
// package.json
{
  ...
  "scripts": {
    "server": "nodemon server.js",
    "client": "npm start --prefix client",
    "dev": "concurrently \"npm run server\" \"npm run client\""
  }
  ...
}
```

A couple of less familiar pieces to explain:

The --prefix client is simply telling the terminal to run this script from the client/ directory, since that's where our React app is located.

Concurrently is a dependency that does just that: it allows us to run both servers simultaneously from the same terminal. You could just as easily run them from separate terminals.

Either way, we have an issue of React listening on port 3000 and our server on a separate port like 5000.

We'll navigate both the local development and production issue with a proxy.

All we need to do is add a line to our React package.json.

```json
// client/package.json
{
  ...
  "proxy": "http://localhost:5000/"
}
```

This tells the React development server to forward requests it doesn't handle itself, like our API calls, to our server running locally on port 5000.
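Roughly how that decision plays out: Create React App's docs describe the dev server as only proxying requests whose Accept header doesn't include text/html, so page navigations stay with React while fetches go to Express. The function below is a simplified model of that rule, not CRA's actual implementation, and proxyTarget is a hypothetical name.

```javascript
const PROXY_TARGET = 'http://localhost:5000/';

// Simplified model: browser page navigations (Accept includes text/html)
// stay with the React dev server; everything else is forwarded to Express.
function proxyTarget(path, acceptHeader = '') {
  if (acceptHeader.includes('text/html')) {
    return null; // served by the React dev server on port 3000
  }
  return new URL(path, PROXY_TARGET).href;
}

// axios sends "application/json, text/plain, */*" by default.
console.log(proxyTarget('/api/movies', 'application/json, text/plain, */*'));
console.log(proxyTarget('/movies/42', 'text/html,application/xhtml+xml'));
```

This is why the same relative /api/${params} URL works in both places: locally the dev server forwards it, and in production Express answers it directly.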

Serving React Static Files

We're in the home stretch! Now all we need to do is adjust our express server to render React files when requesting the appropriate routes.

First, build the React application.

```shell
$ npm run build --prefix client
```

You can set up a build method in your npm script to run this when you deploy the application. That will likely be preferable since you'll be making updates to the React app. For simplicity here, this should get us up and running, though.

Doing this will populate your client/build folder with the assets for your React app. Great!

Now to wire our Express app to our static files.

There are two approaches to do this between production and development, but ultimately the core approach is the same. We need to add these key lines near the end of our application:

```javascript
// server.js
...
// Serve Static Assets if in Production
app.use(express.static(path.resolve(__dirname, 'client', 'build')));

app.get('*', (req, res) => {
  res.sendFile(path.resolve(__dirname, 'client', 'build', 'index.html'));
});

// Ports
...
```

We're serving the static files from our build folder, and then any request that doesn't match any routes for our API higher up in the file will serve the index of our React app instead.
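The order of those calls is what makes this work. Here's a simplified, Express-free model of how a request gets resolved; resolveRequest and the sample file list are hypothetical, and it assumes every /api/ path is matched by a route registered earlier in server.js.

```javascript
// Simplified model of the middleware order in server.js (not Express itself).
function resolveRequest(pathname, buildFiles) {
  // 1. API routes registered earlier in the file are checked first.
  if (pathname.startsWith('/api/')) return 'api route';
  // 2. express.static serves any file that exists in client/build.
  if (buildFiles.has(pathname)) return `client/build${pathname}`;
  // 3. The app.get('*') catch-all hands everything else to the React app.
  return 'client/build/index.html';
}

const buildFiles = new Set(['/static/js/main.js', '/favicon.ico']);
for (const p of ['/api/movies', '/static/js/main.js', '/movies/42']) {
  console.log(p, '->', resolveRequest(p, buildFiles));
}
```

If the catch-all were registered above the API routes, every request, including /api/ ones, would receive index.html, which is why it sits near the end of the file.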

This will work fine in production when we configure our host to the same source. This, however, will override our concurrently running React app on port 3000 in local development.

To mitigate this, we just need to add a conditional statement:

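```javascript
// server.js
// (express, path, and app are set up earlier in the file)
if (process.env.NODE_ENV === 'production') {
  // Set static folder; on Elastic Beanstalk this resolves to /var/app/current/client/build
  app.use(express.static(path.resolve(__dirname, 'client', 'build')));

  // Any request not matched above falls through to the React app's index.html
  app.get('*', (req, res) => {
    res.sendFile(path.resolve(__dirname, 'client', 'build', 'index.html'));
  });
}
```

Easy! If in production, reroute requests to the index of our React app.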

Wrapping Up

And we're all set! So we've covered how to allow Express and React to communicate from the same source. Next we'll look at how to configure this application for deployment to Elastic Beanstalk on AWS.