Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- Music Teachers Expand Dreams. On Seth Godin's "Stop Stealing Dreams"

- 250 Box Challenge Complete. Victory post after completing the drawabox namesake challenge.

- Creative Legacy. On originality and creativity.

- Folk Music. On creating online, my personal favorite.

- Verifying it's an art post (based on which folder I select to save the image to)

- Creating a file with the post date in the filename (I usually put them together on Fridays, and share on Saturday)

- Writing details from the command line, such as title, alt text, and post body.

- Look up the user in the DB

- Verify the password

- Handle Matches

- Handle Mismatch

- Verify with the previous framework/library the encryption method

- If possible, transfer over the code/libraries used

- Wrap it in a checkPassword() function.

- Designed to be secure by default and encourage best practices for safeguarding user data

- Uses Cross-Site Request Forgery Tokens on POST routes (sign in, sign out)

- Default cookie policy aims for the most restrictive policy appropriate for each cookie

- When JSON Web Tokens are enabled, they are encrypted by default (JWE) with A256GCM

- Auto-generates symmetric signing and encryption keys for developer convenience

Happy New Year!

Auld Lang Syne

2024 let's goooo!

2023

2023 has been a meaty year. In 2022 and the years just prior, I was planting several seeds. A few creative projects here and there, starting a new career, and making the move to Dallas. With 2023, much of the new is now integrating into my day to day life. A garden is growing!

From that garden, here were some of the fruits:

A Year of Working With the Garage Door Open

I time capsuled an intention at the start of 2023. In January, I wrote:

When I was a teenager leaving for college, I very ceremoniously made a promise to myself: Whatever I do, I want to be making things everyday.

I was inspired by internet pop culture, largely webcomics, artists, musicians, developers, bloggers, and skit comedy on YouTube.

Because of that, I had a dream as a kid. A weird dream - but my dream was to have a website. A home on the internet where I could create through all the mediums I loved - writing, music, art, even code!

Since I have this dot com laying around, my aim for this year is to use it! I plan to work with the garage door open. Sharing what I'm learning, what I'm exploring in drawing and music, what I'm reading, and what I'm learning through my work in software.

I can happily say: mission accomplished! Counting it all up, 205 posts made it on the blog this year. Not quite 365 days, but not bad. :)

But the volume isn't really the point. The joy is in the making. And it's been a thrill to be lit up to make so much this year!

So, while I'm not hitting a fully-algorithm-satiating level of daily output, I'm pretty ok with what's making it up here! Next year, I'm even planning to scale back: making things every day, but sharing when I'm ready. I want to fully honor the creative cycle, leaving space for rest between projects.

Art

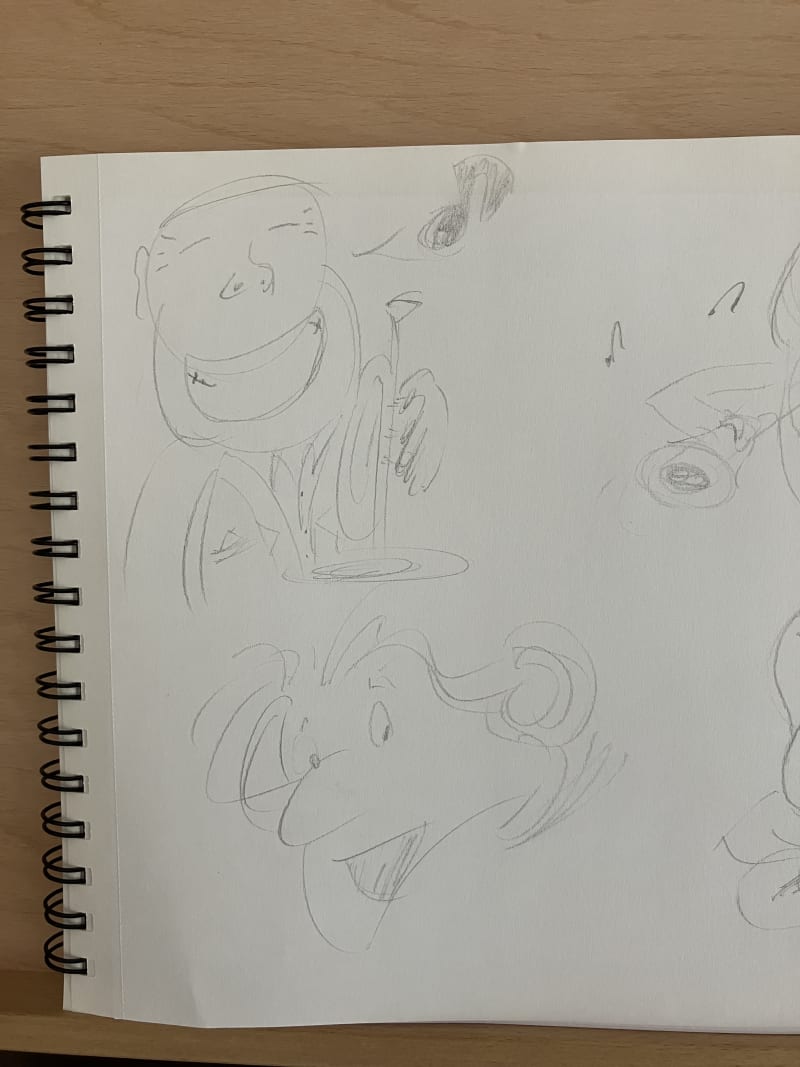

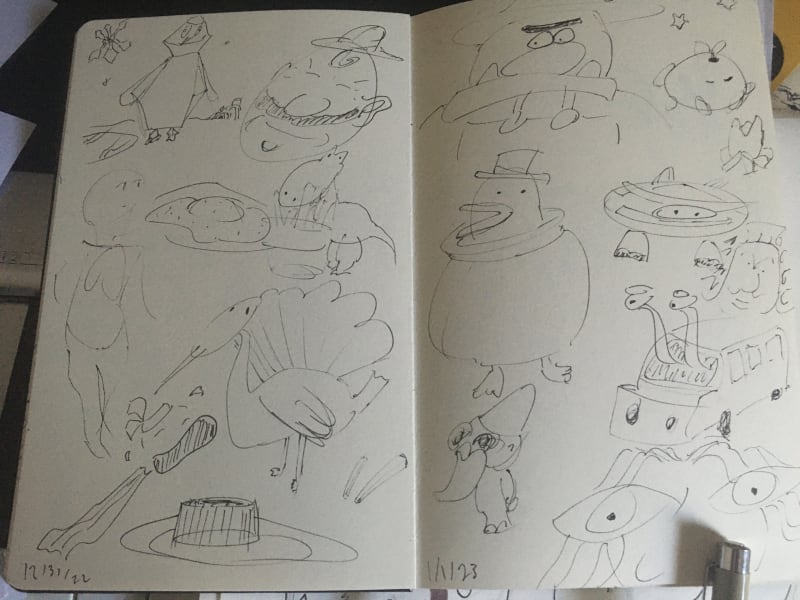

I drew! A LOT! In September of 2022, I committed to drawing and learning some technique. This year I stuck with it, finding time every day to sketch.

In 2022, it was life changing to be making music regularly - having a new voice to express through. I realized that you don't have to have "The Gift" to compose, you just do it!

Same is true for art, and it's been equally life changing!

With music, I've always been learning to listen, but composing and improvising taught me to listen to intuition. The same thing has happened through drawing. I've been really intentional about making time to just doodle every day. Not worrying about the end result, I just wanted to have an idea and get it out on the page. Being able to do that with drawing is a game changer – to see an image and then at least have a rough idea of it on the page is like nothing else!

I have to say, music writing is fantastic because it expresses what otherwise is really difficult to express. It's almost otherworldly. Art is thrilling, because it can capture things that do exist, or things that could exist - in a vocabulary that's part of the daily vernacular: shapes and color.

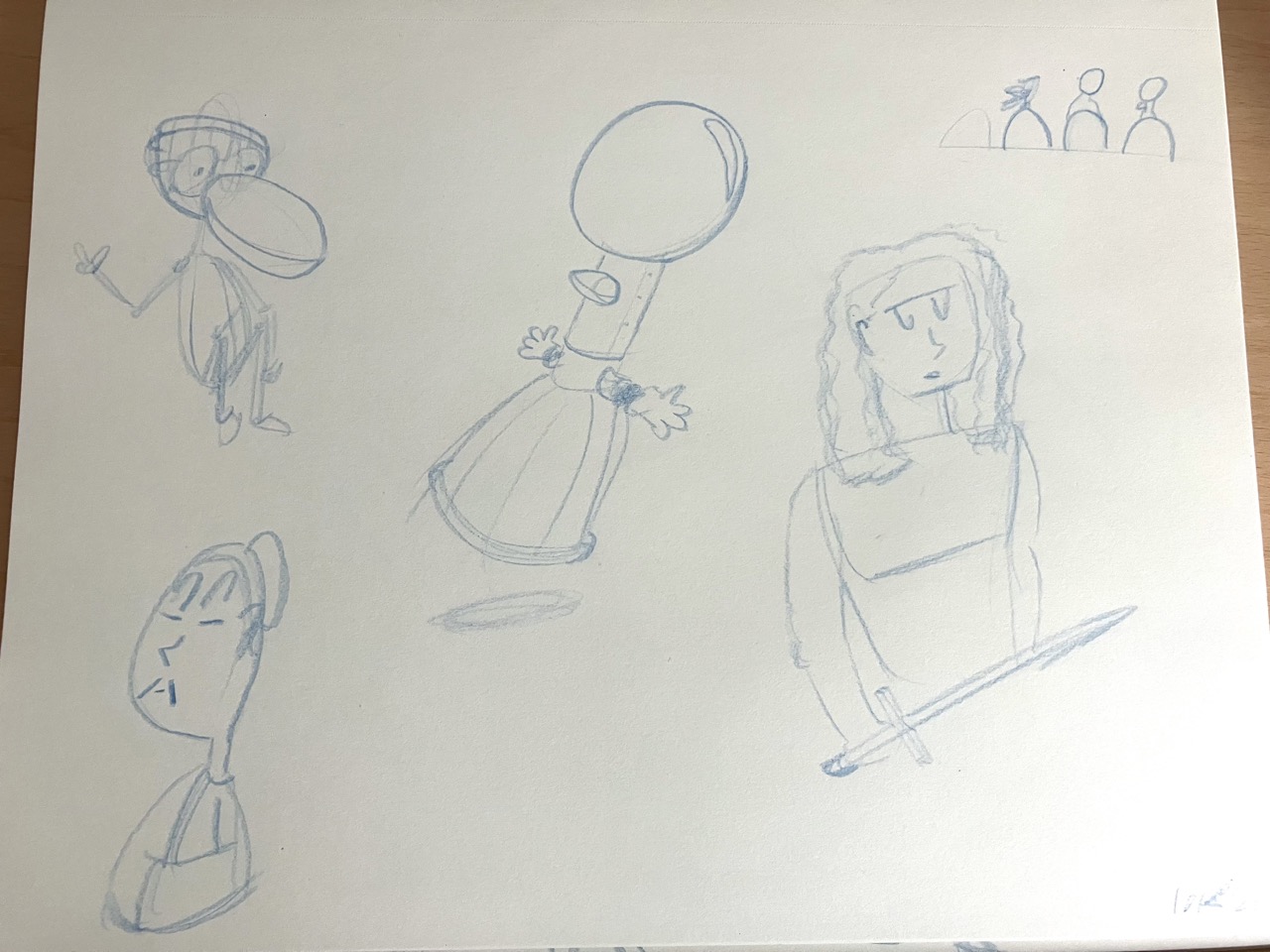

Once you know the building blocks - anatomy, spheres, boxes, cylinders - and you can build with that... the real fun comes with drawing something that isn't in front of you. True invention!

Even when drawing from reference, especially drawing people, there's something so satisfying about capturing life, just from moving the pencil across the page...

So, yeah, I kind of love drawing now. You can see what I've been making on my blog and read about a few more lessons learned from drawing.

Music

Picking up art, I had this worry that I wouldn't have any juice left for writing music. I'm thankful that the opposite has been true!

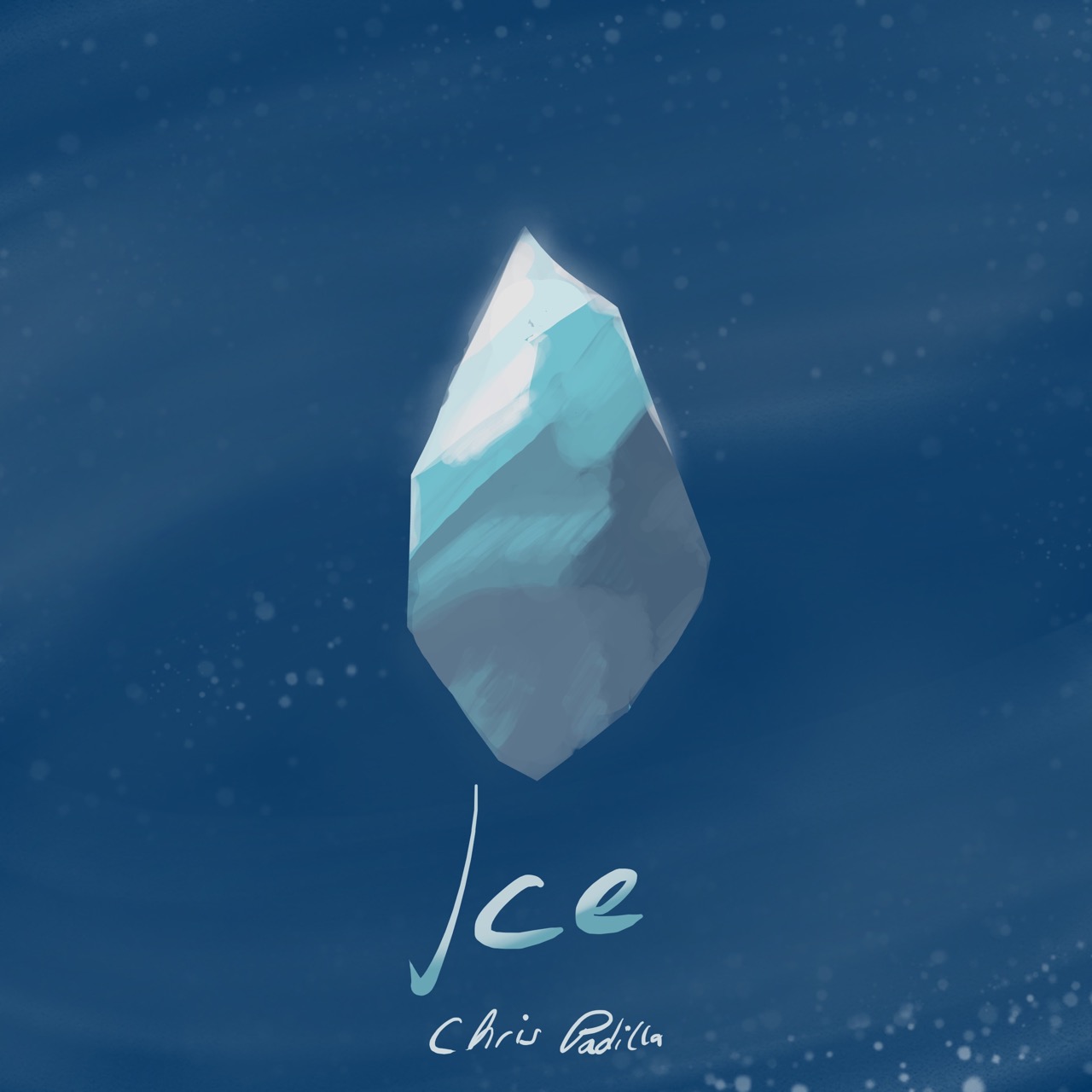

I wanted to up the ante by recording acoustic instruments more than I did in 2022. And I managed to for Cinnamon, Spring, Sketches and Meditations! I have fun with pure music production projects like Forest, Whiteout, Floating, Ice. But, having spent a couple of decades on an acoustic instrument, I have a soft spot for making the music with my own hands!

This year, I played lots of piano, got a classical guitar, listened to a wide variety of music (according to Spotify, mainly chill breakcore, math rock, and J-Ambient.)

I love my new nylon-string guitar and spend a lot of time simply improvising melodies on it. I learned fingerstyle, played some classical, and spent a lot of the year demystifying the neck of the guitar.

I may be crazy for thinking this, but I'm really interested in learning jazz piano before spending more time on jazz sax. So I spent some time reading a few standards and some simple blues improv.

A fun year for music, all in all! Looking to do more jazz in 2024!

Tech

My second full year in software! I've learned tons on the job and am absolutely proud to work with the team I'm on. No better place to be learning and making alongside really smart and driven folks!

This year, I had the intent of really exploring in my spare coding time. By the start of the year, I was really comfortable with JavaScript development. I wanted to further "fatten the T," as it were. Explore new languages, investigate automated testing, and get familiar with cloud architecture. A tall order!!

I started the year with testing, first understanding the philosophy of TDD, taking a look at what a comprehensive testing environment looks like in an application, and then getting my hands dirty with a few libraries. All of those articles can be found through the testing tag.

From there, I was itching to learn a new language. One of my previous projects was with a .NET open source application, and I was curious to see how an ecosystem designed for enterprise functioned. So, on to C#! I learned a great deal about Object Oriented Programming through the process, dabbled with the ASP.NET framework, and deployed to Azure. You can read more through the CSharp tag on my blog.

As if one language wasn't enough, I dabbled a bit in Go towards the end of the year. Now having an understanding of a server backend language, I was curious to see what a cloud-centric language looked like. So I did a bit of rudimentary exploring for fun. Take a look at the Go tag for more.

Honorary mention to spending time learning SQL! More through the SQL tag.

We'll see where the next year goes. After having gone broad, I'm ready to go deep. While I know Python, I'm interested in getting a few more heavy projects under my belt with it as well as further familiarizing myself with AWS.

Reading

I spent time making reading just for fun again! In that spirit, 2023 became the year of comics for me. I still read plenty of art, music, and non fiction books this year. You can see what my favorites were on my wrap up post.

Life

Dallas is great! Seeing plenty of fam, Miranda's making it through school, and I'm playing DnD on the regs with A+ folks. Can't ask for much more!

2024

My sincere hope for next year is to be open to what comes next. I like what's cooking and I'd love to see more, but the real thrill of this year was in exploring so many interests. We'll see! Happy New Year!

Lessons From A Year of Drawing

This year I drew! A LOT! In September of 2022, I committed to drawing daily and learning some technique. This year I stuck with it, finding time every day to sketch.

A year ago, it was life changing to be making music regularly - having a new voice to express through. I realized that you don't have to have "The Gift" to compose. You just... do it!

Same has been true for drawing, and it's been equally life changing!

A Space to Nurture Intuition

With music, I've always been learning to listen, but composing and improvising taught me to listen to intuition. The same thing has happened through drawing. I've been really intentional about making time to just doodle every day. Not worrying about the end result, I just wanted to have an idea and get it out on the page.

Being able to do that with drawing is a game changer – to see an image and then at least have a rough idea of it on the page is like nothing else!

I have to say, music writing is fantastic because it expresses what otherwise is really difficult to express. It's almost otherworldly.

Art is thrilling, because it can capture things that do exist. Or things that could exist - in a vocabulary that's part of the daily vernacular: shapes and color.

Once you know the building blocks - anatomy, spheres, boxes, cylinders - and you can build with that, the real fun comes with drawing something that isn't in front of you. True invention, and it can be accomplished in just a minute if it's a sketch.

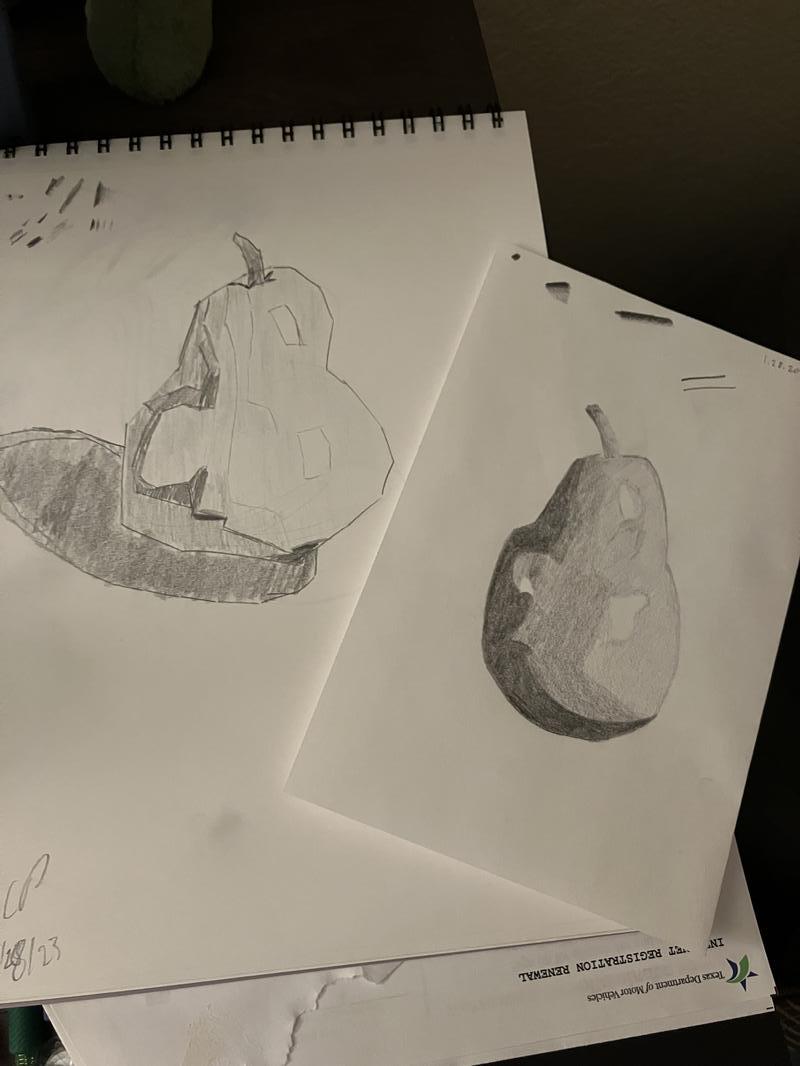

Learning to See and Loving How You See

Even when drawing from reference, especially drawing people, there's something so satisfying about capturing life, just from moving the pencil across the page.

Miranda and I spent a few nights this year throwing reference images up on the screen and drawing together. Miranda took some art classes in school, and has art parents for parents, so there's a little more technique for her to work with. But the most stunning thing about drawing alongside her is what she sees and what comes through in her drawings.

If we put aside the difference in technique and experience, my drawings are largely contour and shape driven, while Miranda's are tonal and value driven.

I love that contrast! Not to say that we won't incorporate either in our own drawings, but it's stunning to me that Miranda's eyes are so highly attuned to the way light and shadow play in an eye or on a still life. These are details I simply gloss over typically.

And all the while, when I look at drawings I admire, I find shape and line very exciting! I'm interested in the directionality and energy of motion.

Whichever the focus, drawing is a way of slowing down and paying attention to what's in front of you. It's a way of celebrating life and soaking in the details.

Even more so, as our comparison demonstrates, drawing is a celebration of how you see. This is inherent even in figure drawing, where you're commonly advised to "push the gesture" beyond the reference, exaggerating a stretch, bend, or twist to further capture the spirit of a pose.

Almost more interesting than the subject to me is how people can see the same references and choose to highlight different qualities in the image.

Keeping It Light

Once I started learning some technique, one of the biggest challenges in my drawing was to take time to just doodle. To essentially "improv" on the page.

When I got started, I could do it all day. And some of my first posts show that I had no trouble here, no matter the result!

But once you start learning about right and wrong, good and bad, staying loose gets tricky. Yet, it's essential — for experimentation, for practice, and most importantly for me, for keeping it fun!

Two things helped: exposure therapy and automatic drawing. Forcing myself to draw no matter the result helped me realize that nobody died if I quietly drew something poorly in my sketchbook, never to be seen by anyone (shocking, I know!) And, on the days when coming up with anything to draw was a challenge, falling back on automatic drawing helped me reset the bar down low. On those days, usually something more solid formed, like flowers or an idea for a character drawing.

So Yeah

I kind of love drawing now! I'm excited to keep exploring.

A woman saw me doodling a couple of months ago and paid me the kindness of a compliment, followed with "That's so impressive, and looks really hard!"

I told her what I'm so excited to tell anyone who will listen: Anyone can do this.

If a guy like me, who's just been goofing around on this for a year, can draw and reap a lifetime of satisfaction from it – so can you!

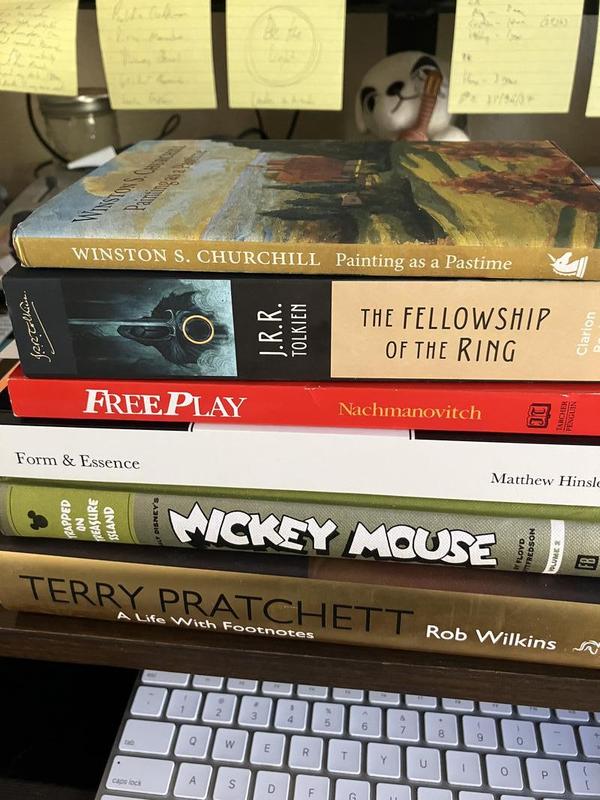

My Reading Year, 2023

From "The Black Swan" by Nassim Nicholas Taleb, Via Anne-Laure Le Cunff:

"The goal of an antilibrary is not to collect books you have read so you can proudly display them on your shelf; instead, it is to curate a highly personal collection of resources around themes you are curious about. Instead of a celebration of everything you know, an antilibrary is an ode to everything you want to explore."

I discovered the idea of an antilibrary this month from artist Jake Parker's excellent blog and newsletter. That feels more true than ever before for me. I have a pile of books I haven't made it to yet this year that won't be on my list (the true antilibrary!) And those that made it into my hands and onto this list very closely track what I've been working on, learning, and dreaming about this year.

I'm historically a pragmatic reader – spending hunks of time on self help. My 2022 reading year is a good representation of that.

This year, instead of saying I'll cut my reading altogether, I spent time making reading just for fun again! Lots of comics fulfilled that, a few music books helped me play at the instrument, and some of my non fiction reading helped me look at my work and day to day more playfully.

Favorites

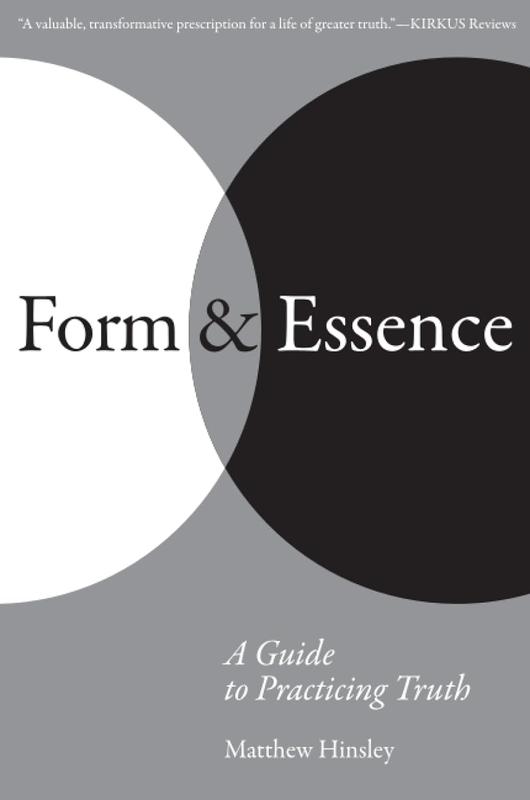

Form & Essence

I took an arts admin class with Matt back at UT. Matt is such an amazing embodiment of the servant leader, and this book feels like I'm getting to take the best bits of his class all over again. I liked it so much, I said these words about it on Goodreads:

"Matthew Hinsley, with a musician's sensitivity, brings the magic of balancing both the tangible and the spiritual off of the artist's canvas and into our daily lives. Form & Essence is an antidote to the day-to-day focus on what's quantifiable. Without asking the reader to forego the material, Hinsley warmly invites the reader to look beyond at all at the things that truly matter — relationships, storytelling, and building a life around character. This book feels like a wonderful blending of Stephen Covey and Julia Cameron — fitting for the subject of the book. But Matt's own generosity and personality shine through clear as day. And his is a personality worth being with in its own right. Even if you only spend a bit of time with a few chapters, I can guarantee that you will see the world differently: with more lightness, with an eye for deepening your connections, and a gravitation towards the seemingly small things that truly matter in life.”

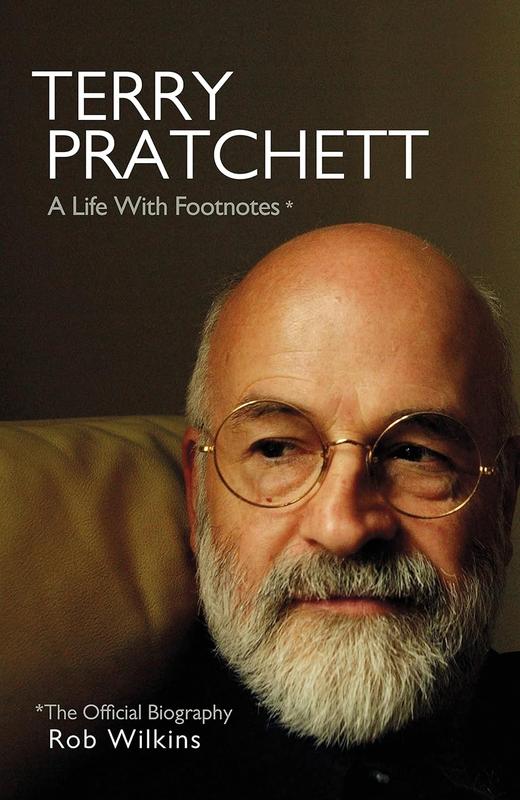

Terry Pratchett: A Life With Footnotes by Rob Wilkins

A humorous, authentic, and heartfelt account by Rob Wilkins. I'm glad to have discovered that the author behind Discworld was just as colorful and down to earth as his stories. Further proof of how prolific and brilliant a writer Terry was. Rob's account of Terry's decline towards the end of his life was absolutely heart-wrenching. Though, as the book concludes, "Of all the dead authors in the world, Terry is the most alive." I had the pleasure of writing a few words after reading on inspiration and libraries.

Free Play: Improvisation in Life and Art

"The creative process is a spiritual path. This adventure is about us, about the deep self, the composer in all of us, about originality, meaning not that which is all new, but that which is fully and originally ourselves." A multi-faceted look at the act of making in the moment. Turned my impression of improvisation on its head by acknowledging that even the act of design and iteration is just as improvisatory as riffing off the cuff!

Fellowship of the Ring

My first read-through!! I bopped between physical and audiobook. Rob Inglis is phenomenal, but the scene painting is so lush, I ended up wanting to sit down and take it all in with the book in hand. Warm characters, a fantastically grand adventure, and a world so fully realized, I'm not at all surprised to see how this remains a timeless classic. Rob Inglis reading and singing the audiobook version is the way to experience this.

Walt Disney's Mickey Mouse "Trapped On Treasure Island" by Floyd Gottfredson

Stunning adventure comics! I devoured the second volume early this year. I can't believe these were printed in the newspaper. The detail in the sets, the grand nature of the story — these strips culminate in a rich novel! Floyd Gottfredson was a major inspiration for Carl Barks, and it's easy to see why. Included are really nice essays giving historical context to these stories as well.

Painting As a Pastime

Thoughts on hobby making and painting from an enthusiastic hobbyist! Wonderful insight on the importance of keeping many creative pots boiling. It's also a beautiful love letter to the medium of color and light through painting. Very inspiring; the book motivated me to keep planting my garden.

Music Books

Faber Elementary Methods. Just a superb elementary music method; I don't even care if it's written for 10-year-olds! Engaging, perfectly paced, highly musical — I never feel overwhelmed and never feel bored. Each piece written by N. Faber is such a treat. Combining all the supplementary books allows for a deep dive into each new topic. If you've never picked up an instrument and want to, my vote is piano and that you give these books a whirl.

Hal Leonard Classical Guitar. As someone who's played campfire guitar for the last few years, this book was really challenging. The pacing is very accelerated, with each piece taking a few leaps. The music is beautiful, but I wouldn't recommend this as a primary course of study.

Christopher Parkening Classical Guitar Method. This, on the other hand, is excellent! Similar to Faber, pieces build on one another and there's ample time to reinforce finger coordination in the left and right hand with several pieces. And for being so simple, plenty of the early pieces are so pretty. I'm not familiar with Parkening's playing yet, but his personal notes and the page spread photos are a nice touch to bring a personality to the journey.

Dan Haerle Jazz Voicings. The secret to jazz piano is using the puzzle pieces of conventional chord progressions and plugging them in. Dan Haerle's book has the puzzle pieces. Knowing them makes playing standards WAY more fluid and fun!

Hal Leonard Jazz Piano Method by Mark Davis. Last year I enjoyed getting started with Mark Levine's Jazz Piano Book. Still being an early pianist, I needed something that was more my speed. Mark Davis' book fits the bill! Found early success reading lead sheets and improvising at the keyboard — an absolutely wild experience for a simple sax player like myself!

Art & Comics

Hayao Miyazaki by Jessica Niebel. Beautiful. Absolutely stunning selection of prints, stills, and write ups. An absolute must for any fan of these films.

Cartoon Animation with Preston Blair. I'll admit I haven't worked through the whole book just yet, but it's been a wonderful resource to help guide my practice. This won't necessarily teach you how to animate so much as give you a curriculum of what to work through with a few tips. For example, Blair touches on the importance of being able to use construction in character development, breezes through topics on perspective, and highlights what makes a character "cute" vs. "screwball." All in all, a fantastic resource that's more of a road map than a step-by-step guide. Such is the way with most art books, I suppose!

Donald Duck The Old Castle Secret By Carl Barks. I wrote about Carl Barks earlier and this is my first intro to his cartoons. Hugely expressive, phenomenal storytelling, and plenty of genuine chuckles!

Calvin & Hobbes by Bill Watterson. WOW!! For a modern newspaper strip, these were so dynamic!! Compared to something like Garfield, it's so much fun to see how animated and expressive these strips were. The foreword captures the spirit well: These strips are such a fantastic representation of what it felt like to be a child, fluidly twisting between a colorful imagination and the world as is.

Cucumber Quest by Gigi D.G. I missed this when it was in its heyday! The art here is stunningly gorgeous; the color design and beautiful locales keep me from turning the page and reading the dang story! The attitude of the series is very 2000s internet culture, so the new adventure has a strong sense of familiarity.

Are You Listening? by Tillie Walden. I've picked this one up a few times this year. I adore Walden's colors. Like Calvin and Hobbes, it seems like there's a back and forth between traveling in a dream and on the open road in the real world. Except the line is much thinner and much more lush.

Sonic the Hedgehog 30th Anniversary Celebration published by IDW. I came for the McElroy feature, but stayed for the incredible art. (And, of course, I grew up on the games, so y'know.) Just look at some of these pages and try to tell me that these aren't excellently paneled, posed, and colored. An absolute treat.

Dragon Ball by Akira Toriyama. I forgot how plain old silly the original series was! Z may be action packed, but this was all gags. The best part of reading these is getting to take in Toriyama's inventive and detailed vehicle design.

Fullmetal Alchemist by Hiromu Arakawa. I tried getting into the anime a couple of years ago, but I think the manga is the way for me. Really exciting artwork, Al and Ed look so stylish on every page!

Non Fiction

One Person/Multiple Careers: A New Model for Work/Life Success by Marci Alboher. I needed this book to tell me I'm not crazy! A great collection of interviews and case studies from people whose work isn't easily bound to one job. Great read for anyone tinkering to find fully meaningful work across multiple interests.

Make Your Art No Matter What: Moving Beyond Creative Hurdles by Beth Pickens. Austin Kleon and Beth Pickens had a great talk recorded online about making creative work, and it led me to her book. Favorite takeaway: Creative people are those who need their practice so they can wholly show up in all other areas of their lives.

How to Fail at Almost Everything and Still Win Big by Scott Adams. I reread this earlier this year and still think about some of the essays. General life advice mixed with Adams' life story, including his navigation through bouts of focal dystonia. Entertaining and insightful!

The Gifts of Imperfection by Brené Brown. I regularly return to these guideposts, though this time around, it was important for me to really spend some time with the opening discussion around shame. Even after years of Brown's work being in the cultural conversation, this little volume still opens up new insight on authentic living on re-reading.

Also

The Alchemist by Paulo Coelho. A last-minute read over the holiday break! Miranda and I both wanted to read this together, and we've loved it! A beautiful journey that illustrates how much richer the world is when we pursue our own personal inspirations and callings, grand or simple. I read this in high school, having practically no life experience to draw on for all of the metaphors. Surprising to me: reading it now, the terrain covered in the book is largely familiar.

Update: All books here have been added to my bookshelf, a dedicated page for all books read.

Odds and Ends

I wrote several articles for the blog this year! At some point, I had a few pieces complete, but not published. Eventually, I had a few too many creative projects spinning, and they fell by the wayside.

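On the finished ones, I'm hitting the publish button, but am leaving the original date written. Here they are, so they don't fall through the cracks: "Music Teachers Expand Dreams" (on Seth Godin's "Stop Stealing Dreams"), "250 Box Challenge Complete," "Creative Legacy," and "Folk Music." They make an interesting sample of where my head has been this year.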

Merry Christmas!

Silent Night

Merry Christmas from our family’s oooooold piano!

Landscape Gestures

Generating Post Templates Programmatically with Python

I'm extending my quick script from a previous post on Automating Image Uploads to Cloudinary with Python. I figured — why stop there! Instead of just automating the upload flow and getting the url copied to my clipboard, I could have it generate the whole markdown file for me!

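From there, here are the steps I'll automate: verifying it's an art post (based on which folder I select to save the image to), creating a file with the post date in the filename (I usually put them together on Fridays and share on Saturday), and writing details from the command line, such as title, alt text, and post body.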

Here we go!

Reorganizing

First order of business is organizing the code. This was previously just a script, but now I'd like to encapsulate everything within a class.

In my index.py, I'll set up the class:

```python
from dotenv import load_dotenv

# Load .env before the cloudinary imports so the SDK can find its credentials
load_dotenv()

import datetime

import cloudinary
import cloudinary.uploader
import cloudinary.api
import pyperclip


class ImageHandler:
    """
    Upload Images to cloudinary. Generate Blog page if applicable
    """

    def __init__(self, test: bool = False):
        self.test = test
        config = cloudinary.config(secure=True)
        print("****1. Set up and configure the SDK:****\nCredentials: ", config.cloud_name, config.api_key, "\n")
```

Beneath, I'll wrap the previous code in a method called "upload_image()".

I'll still call the file directly to run the main script, so at the bottom of the file I'll add the code to do so:

```python
if __name__ == '__main__':
    ImageHandler().run()
```

So, on run, I want to handle the upload to Cloudinary. From the result, I want to get the image URL back, and I want to know if I should start generating an art post:

```python
def run(self):
    image_url, is_art_image = self.upload_image()
    print(is_art_image)
    if is_art_image:
        self.generate_blog_post(image_url=image_url)
```

To make that happen, I'm adding a bit of logic to upload_image() to check if I'm storing the image in my art folder:

```python
def upload_image(self):
    ...
    options = [
        "/chrisdpadilla/blog/art",
        "/chrisdpadilla/blog/images",
        "/chrisdpadilla/albums",
    ]
    ...
    selected_number = int(selected_number_input) - 1
    # The art folder is the first option
    is_art_image = selected_number == 0
    ...
    return (res_url, is_art_image)
```

That tuple hands back the URL string and the boolean in a neat little package.
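The prompt code inside upload_image() is elided above. As a hypothetical sketch of just the folder-selection step (select_folder is a made-up name, and the 1-based menu is an assumption inferred from the minus one in int(selected_number_input) - 1), the lookup and the art-folder check could be isolated like this:

```python
from typing import Sequence, Tuple

FOLDER_OPTIONS = (
    "/chrisdpadilla/blog/art",
    "/chrisdpadilla/blog/images",
    "/chrisdpadilla/albums",
)


def select_folder(selected_number_input: str, options: Sequence[str] = FOLDER_OPTIONS) -> Tuple[str, bool]:
    """Hypothetical helper: map a 1-based menu choice to (folder path, is_art_image)."""
    # The menu is 1-based; the options sequence is 0-based
    selected_number = int(selected_number_input) - 1
    # Reject out-of-range picks instead of letting -1 wrap around to the last folder
    if not 0 <= selected_number < len(options):
        raise ValueError(f"Pick a number from 1 to {len(options)}")
    # The art folder is listed first
    is_art_image = selected_number == 0
    return options[selected_number], is_art_image
```

The bounds check is the main design choice: without it, typing 0 becomes index -1, and Python would quietly pick the albums folder.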

Now for the meat of it! I'll write out my generate_blog_post() method.

First up: getting the date. Using datetime and timedelta, I'll check when the next Saturday will be (ART_TARGET_WEEKDAY is a constant set to 6 for Saturday):

```python
def generate_blog_post(self, image_url: str = '') -> bool:
    today = datetime.datetime.now()
    # isoweekday(): Monday=1 ... Saturday=6, Sunday=7 (weekday() would make Saturday 5)
    weekday = today.isoweekday()
    # % 7 keeps the offset in 0-6, so running on a Sunday lands on the next Saturday
    days_from_target = datetime.timedelta(days=(ART_TARGET_WEEKDAY - weekday) % 7)
    target_date = today + days_from_target
    target_date_string = target_date.strftime('%Y-%m-%d')
```

Sometimes I do this a week out, so I'll add some back and forth in case I want to set the date manually:

```python
    date_ok = input(f"{target_date_string} date ok? (Y/n): ")
    # Accept "n" or "N" to override the suggested date
    if "n" in date_ok.lower():
        print("Set date:")
        target_date_string = input()
        print(f"New date: {target_date_string}")
        input("Press enter to continue ")
```

From here, it's all command line inputs.

```python
    title = input("Title: ")
    alt_text = input("Alt text: ")
    caption = input("Caption: ")
```

And then I'll use a with statement to do my file writing. The benefit of the with keyword here is that it handles closing the file automatically.

```python
    md_body = SKETCHES_POST_TEMPLATE.format(title, target_date_string, alt_text, image_url, caption)
    with open(BLOG_POST_DIR + f"/sketches-{target_date_string}.md", "w") as f:
        f.write(md_body)
    return True
```

Voilà! Running it from the command line now generates a little post! Lots of clicking and copy-and-pasting time saved! 🙌
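To sanity-check the date math without clicking through prompts, the non-interactive pieces can be pulled out into plain functions. A minimal sketch, assuming ISO weekday numbering for ART_TARGET_WEEKDAY and a made-up front-matter template (the real SKETCHES_POST_TEMPLATE and BLOG_POST_DIR aren't shown, and these function names are hypothetical):

```python
import datetime
import os

# ISO weekday numbering: Monday=1 ... Saturday=6, Sunday=7
ART_TARGET_WEEKDAY = 6

# Hypothetical template: the real SKETCHES_POST_TEMPLATE isn't shown in the post
SKETCHES_POST_TEMPLATE = """---
title: {}
date: '{}'
---

![{}]({})

{}
"""


def next_target_date(today: datetime.date, target_isoweekday: int = ART_TARGET_WEEKDAY) -> datetime.date:
    """Return today if it already falls on the target weekday, otherwise the next one."""
    # % 7 keeps the offset in 0..6, so a Sunday never rolls back to the day before
    days_ahead = (target_isoweekday - today.isoweekday()) % 7
    return today + datetime.timedelta(days=days_ahead)


def render_sketch_post(date_string: str, title: str, alt_text: str, image_url: str, caption: str) -> str:
    """Fill the template positionally, in the same order as the post's format() call."""
    return SKETCHES_POST_TEMPLATE.format(title, date_string, alt_text, image_url, caption)


def write_sketch_post(post_dir: str, date_string: str, title: str, alt_text: str, image_url: str, caption: str) -> str:
    """Write sketches-<date>.md into post_dir and return the file path."""
    path = os.path.join(post_dir, f"sketches-{date_string}.md")
    # The with block closes the file even if write() raises
    with open(path, "w", encoding="utf-8") as f:
        f.write(render_sketch_post(date_string, title, alt_text, image_url, caption))
    return path
```

Called with datetime.date(2023, 12, 20), a Wednesday, next_target_date() returns 2023-12-23, the following Saturday; called on Sunday 2023-12-24, it returns 2023-12-30 rather than the day before.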

Angels We Have Heard on High

Taeko Onuki

New Album — Ice 🧊

Music somewhere between ambient classical and winter lofi!

Purchase on 🤘 Bandcamp and Listen on 🙉 Spotify or any of your favorite streaming services!

Credentials Authentication in Next.js

Taking an opinionated approach, Next Auth intentionally limits the functionality available for using credentials such as email/password for logging in. The main limit is that this forces a JWT strategy instead of using a database session strategy.

Understandably so! Data leaks exposing passwords keep making headlines, and they've been a major security issue across platforms and services.

However, the limitation takes some navigating when you are migrating from a separate backend with an existing DB and need to support older users that created accounts with the email/password method.

Here's how I've been navigating it:

Setup Credentials Provider

Following the official docs will get you most of the way there. Here's the setup for my authOptions in app/api/auth/[...nextauth]/route.js:

```js
import CredentialsProvider from "next-auth/providers/credentials";

...

providers: [
  CredentialsProvider({
    name: 'Credentials',
    // These keys become the fields on `credentials` inside authorize()
    credentials: {
      email: {label: 'Email', type: 'text', placeholder: 'your-email'},
      password: {label: 'Password', type: 'password', placeholder: 'your-password'},
    },
    async authorize(credentials, req) {
      ...
    },
  }),
```

Write Authorization Flow

From here, we need to set up our authorization logic. We'll look up the user in the DB, verify the password, and handle both a match and a mismatch:

```js
async authorize(credentials, req) {
  try {
    // Look up the user from the credentials supplied
    const foundUser = await db.collection('users').findOne({'unique.path': credentials.email});

    if (!foundUser) {
      // Returning null displays an error advising the user to check their details.
      // You can also reject this callback with an Error, which sends the user
      // to the error page with the error message as a query parameter.
      return null;
    }

    if (!foundUser.unique.path) {
      console.error('No password stored on user account.');
      return null;
    }

    // await is harmless if checkPassword() is synchronous, and required if it returns a Promise
    const match = await checkPassword(foundUser, credentials.password);

    if (match) {
      // Important to exclude password from return result
      delete foundUser.services;
      return foundUser;
    }
  } catch (e) {
    // Log privately instead of letting the raw error reach the client
    console.error(e);
  }

  // A mismatch or a caught error both end in a generic failed login
  return null;
},
```

PII

The comments explain most of what's going on. I'll explicitly note that I'm using a try/catch block to handle everything. When an error occurs, the default behavior is for the error to be sent to the client and displayed. Even an incorrect-password error message could expose Personally Identifiable Information (PII). By catching the error, we can log it with our own service and simply return null for a more generic "Failed login" error.

Custom DB Lookup

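I'll leave out the explicit details of how a password is verified for my use case. But here's a general approach you might take when migrating: verify the encryption method the previous framework or library used, transfer over the code or libraries it relied on if possible, and wrap it all in a checkPassword() function.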
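What "verify the password" means depends entirely on the previous backend. As a minimal sketch, written in Python for illustration rather than as the Next.js code itself, and assuming a legacy store of salted PBKDF2-SHA256 hashes (a stand-in scheme; the record layout and both function names are hypothetical), a checkPassword()-style helper might look like:

```python
import hashlib
import hmac
import os
from typing import Optional


def hash_password(password: str, salt: Optional[bytes] = None, iterations: int = 600_000) -> dict:
    """Produce a record in the hypothetical legacy format: hex salt, iteration count, hex hash."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return {"salt": salt.hex(), "iterations": iterations, "hash": digest.hex()}


def check_password(stored: Optional[dict], candidate: str) -> bool:
    """Re-derive the hash with the stored salt and iterations, then compare."""
    if not stored:
        # No password on the account: treat it like a mismatch
        return False
    salt = bytes.fromhex(stored["salt"])
    expected = bytes.fromhex(stored["hash"])
    actual = hashlib.pbkdf2_hmac("sha256", candidate.encode("utf-8"), salt, stored["iterations"])
    # Constant-time comparison avoids leaking how many bytes matched
    return hmac.compare_digest(actual, expected)
```

If the old system used bcrypt or another scheme, the same idea holds: reuse that scheme's own verify function with the stored parameters rather than comparing strings by hand.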

Sending Passwords over the Wire?

A concern that came up for me: We hash passwords before storing them in the database, but is there an encryption step needed for sending them over the wire?

Short answer: No. HTTPS covers it for the most part.

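Additionally, Next Auth already takes many security steps out of the box. On their site, they list the following: it's designed to be secure by default and to encourage best practices for safeguarding user data; it uses Cross-Site Request Forgery (CSRF) tokens on POST routes (sign in, sign out); its default cookie policy aims for the most restrictive policy appropriate for each cookie; when JSON Web Tokens are enabled, they are encrypted by default (JWE) with A256GCM; and it auto-generates symmetric signing and encryption keys for developer convenience.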

CSRF is the main concern here, and they have us covered!

Integrating With Other Providers

Next Auth also allows for OAuth sign-in as well as tokens emailed to clients. However, it's not a straight shot: the Credentials provider requires a JWT strategy, while emailed tokens require a database strategy.

There's some glue that needs adding from here. A post for another day!